RBAR Performance in PowerShell

Years ago before I learned PowerShell, I was using T-SQL to do things it just wasn't meant to do, namely SQL Server estate management. T-SQL is incredibly efficient when managing data, but it's not very efficient when managing SQL Server instances.

For example, say you need to perform a task against each database. The way most people do this in T-SQL is by calling the undocumented and unsupported stored procedure sp_msforeachdb. As T-SQL is optimized to work with sets or batches of data, it's also not optimized to process that foreach part of sp_msforeachdb, which the SQL Server community fondly calls row-by-agonizing-row.

PowerShell, on the other hand, was pretty much built to process objects/data/results row-by-awesome-row. There's a number of ways to do it, too.

My favorite of these methods is the foreach (x in y) because it's easy to read and easy to understand. It's also one of the fastest methods, which I was excited to see.

The benchmarks

In the code block below, I use the benchmark performance module, benchpress, to test out how fast PowerShell can process real-world data using various looping methods. There are 18200+ files in the collection, which was built from getting a list of all the files within C:\Windows\System32 on Windows and /usr on macOS.

1$files = Get-ChildItem C:\windows\system32 -Recurse

2

3Measure-Benchmark -RepeatCount 25 -Technique @{

4 ForEachObject = {

5 $files | ForEach-Object -Process {

6 $null = $PSItem.Name

7 }

8 }

9

10 foreach = {

11 foreach ($file in $files) {

12 $null = $file.Name

13 }

14 }

15

16 for = {

17 for ($i=0; $i -lt $files.Length; $i++) {

18 $null = $files[$i].Name

19 }

20 }

21

22 dowhile = {

23 $i = 0

24 do {

25 $null = $files[$i].Name

26 $i++

27 } while ($i -lt $files.Length)

28 }

29

30 dountil = {

31 $i = 0

32 do {

33 $i++

34 $null = $files[$i].Name

35 } until ($i -eq $files.Length)

36 }

37

38 foreachMethod = {

39 $files.foreach({ $null = $PSItem.Name })

40 }

41

42 GetEnumerator = {

43 $file = $files.GetEnumerator()

44 while ($file.MoveNext())

45 {

46 $null = $file.Name

47 }

48 }

49}

I re-ran the benchmark a bunch of times on a couple different platforms and ultimately, it suggested that foreach is the fastest approach in PowerShell! The same is not true for C#, oddly.

📝 Check out James O'Neill's explanation in the comments below to see how my testing methods slowed down ForEach-Object. Ultimately, ForEach-Object would still be the slowest but it's possible to make it faster by not setting the $files variable first.

Results

Here, I processed 18k objects on Windows PowerShell 5.1 on an Intel NUC i7.

1Technique Time RelativeSpeed Throughput

2--------- ---- ------------- ----------

3foreach 00:00:00.307370 1x 81.33/s

4GetEnumerator 00:00:00.315592 1.03x 79.22/s

5dowhile 00:00:00.741708 2.41x 33.71/s

6dountil 00:00:00.752610 2.45x 33.22/s

7for 00:00:00.754709 2.46x 33.13/s

8foreachMethod 00:00:01.281173 4.17x 19.51/s

9ForEachObject 00:00:04.080342 13.27x 6.13/s

And on the same machine, using PowerShell 7.2.

1Technique Time RelativeSpeed Throughput

2--------- ---- ------------- ----------

3foreach 00:00:00.206436 1x 121.1/s

4GetEnumerator 00:00:00.244730 1.19x 102.15/s

5dountil 00:00:00.486118 2.35x 51.43/s

6for 00:00:00.486775 2.36x 51.36/s

7dowhile 00:00:00.548534 2.66x 45.58/s

8foreachMethod 00:00:00.750939 3.64x 33.29/s

9ForEachObject 00:00:01.649981 7.99x 15.15/s

Pretty impressive! This is what it looked like on macOS, also running PowerShell 7.2. The dataset was limited to the exact number of files on the Windows machine, using the Select-Object -First filter.

1Technique Time RelativeSpeed Throughput

2--------- ---- ------------- ----------

3foreach 00:00:00.211701 1x 118.09/s

4GetEnumerator 00:00:00.284063 1.34x 88.01/s

5dowhile 00:00:00.578216 2.73x 43.24/s

6for 00:00:00.582597 2.75x 42.91/s

7dountil 00:00:00.585238 2.76x 42.72/s

8foreachMethod 00:00:01.155784 5.46x 21.63/s

9ForEachObject 00:00:02.774726 13.11x 9.01/s

I like foreach because in addition to use it being easy to read, it also feels natural to me to read "for each file in files.."

If you want, you can also use the technique below which doesn't save the output of Get-ChildItem to a variable or consume additional memory.

1foreach ($file in ,(Get-ChildItem)) {

2 $null = $file.Name

3}

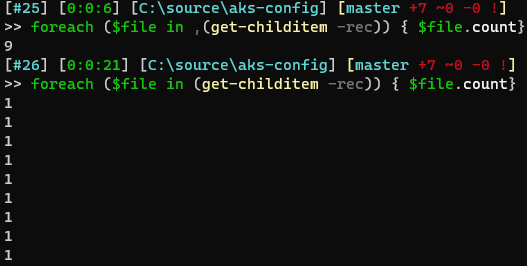

If I correctly interpreted Bruce Payette's article, Array literals in PowerShell, that comma operator before the Get-ChildItem collection constructs an array with just one member which makes everything blazing fast.

This is because, as Chris Gardner explained to me, when you put that comma there, the pipe will not unroll your result set for you. It's sent in one giant resultset.

It'll pull in the csv first and then call the Write-DbaDbTableData command with a single array. The pipeline unrolls it for you, if you don't use it then it'll process the whole full array. How that array is processed depends on the command, some will do a foreach inside to process them one at a time and others will do something with the full array.

This can be best seen in this screenshot he sent to clarify.

Speeding up piped input

One of the commands can greatly benefit from this comma operator is Write-DbaDbTableData, especially when processing large amounts of data. Behind the scenes, Write-DbaDbTableData takes a data set and imports it to SQL Server using the SqlBulkCopy (BULK INSERT) method.

As a PowerShell user, you may initially think that this is an ideal way to perform the import:

1Import-Csv -Path C:\temp\cars.csv |

2 Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable

But in the code above, the results of Import-Csv are actually piped to Write-DbaDbTableData, row-by-agonizing-row. The reason this method would be agonizing is because Write-DbaDbTableData executes once for every single row. If you have 1500 rows in your CSV, Write-DbaDbTableData will build a SqlBulkImport object and insert one little row, 1500 times total. I imagine this is even slower than doing a regular ol' INSERT, which is often wildly slower than BULK INSERT for bulk imports.

The way we can make this a ton faster is to ensure that the InputObject (the parameter that accepts the actual piped input) is given one big dataset instead of 1500 small ones.

The first way that comes to mind for me is to assign the CSV output to a variable, then passing it to the InputObject parameter.

1$csv = Import-Csv -Path C:\temp\cars.csv

2Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable -InputObject $csv

Or you may even consider using parens to wrap your CSV import so that it excutes entirely before being passed through the pipeline.

1(Import-Csv -Path C:\temp\cars.csv) |

2 Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable

3

4# Which seems similar to...

5

6Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable -InputObject (Import-Csv -Path C:\temp\cars.csv)

The downside to both of these methods is that your RAM may get maxed out if your CSV is large enough, because all of that rich, object-oriented data is being stored in memory.

An alternative, as was mentioned earlier, is to use the comma operator and pipe that to Write-DbaDbTableData.

1,(Import-Csv -Path C:\temp\cars.csv) |

2 Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable

This is even faster than using the InputObject parameter and doesn't use memory! No idea how that works 😆. Shoutout to Aaron Nelson for introducing me to this magic!

More benchmarks

Let's take a look at the benchmarks for the piping methods above. Oh, but before we do, I want to highlight that the CommaOperator approach far outperforms the others when the benchark is run just once. When the benchmark operation is repeated like 10-25 times, suddenly SaveToVariableFirst outpeforms everything else. I'm assuming that's because my disks have caching built-in.

1Measure-Benchmark -RepeatCount 25 -Technique @{

2 NoOptimization = {

3 Import-Csv -Path C:\temp\cars.csv |

4 Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable

5 }

6

7 WrappedNoOptimization = {

8 (Import-Csv -Path C:\temp\cars.csv) |

9 Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable

10 }

11

12 SaveToVariableFirst = {

13 $csv = Import-Csv -Path C:\temp\cars.csv

14 Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable -InputObject $csv

15 }

16

17 WrappedInputObject = {

18 Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable -InputObject (Import-Csv -Path C:\temp\cars.csv)

19 }

20

21 CommaOperator = {

22 ,(Import-Csv -Path C:\temp\cars.csv) |

23 Write-DbaDbTableData -SqlInstance sql01 -Database tempdb -Table cars -AutoCreateTable

24 }

25}

So here are the results when the script blocks are run just once:

1Technique Time RelativeSpeed Throughput

2--------- ---- ------------- ----------

3SaveToVariableFirst 00:00:00.430374 1x 2.32/s

4CommaOperator 00:00:00.472427 1.1x 2.12/s

5WrappedInputObject 00:00:00.542130 1.26x 1.84/s

6NoOptimization 00:00:00.846065 1.97x 1.18/s

7WrappedNoOptimization 00:00:00.846338 1.97x 1.18/s

And then when they're ran 25x in a row:

1Technique Time RelativeSpeed Throughput

2--------- ---- ------------- ----------

3SaveToVariableFirst 00:00:12.854821 1x 1.94/s

4CommaOperator 00:00:13.194680 1.03x 1.89/s

5WrappedInputObject 00:00:13.500615 1.05x 1.85/s

6WrappedNoOptimization 00:00:23.450838 1.82x 1.07/s

7NoOptimization 00:00:25.608213 1.99x 0.98/s

Seems safe to say that CommaOperator is the most efficient way all of the time and a solidly fast way most of the time.

Other resources

If you like performance posts, check out Stephen Owen's classic, Coding for Speed. It's one of my all-time favorites and a post I still refer to regularly in my own coding techniques.

Tobias Weltner's post, Speeding up the Pipeline, is also super helpful in better understanding the pipeline.

And James O'Neill's PowerShell Arrays, performance and [not] sweating the small stuff. is anohtr great one!