PDF Text to SQL Data: Using OpenAI's Structured Output with PSOpenAI

Incredible! I just used PowerShell and OpenAI to import text from a PDF right into SQL Server 🚀 AI's usefulness is often debated but this is such a great use-case. I also see it being useful for automatically adding SharePoint document metadata and cleaning dirty data.

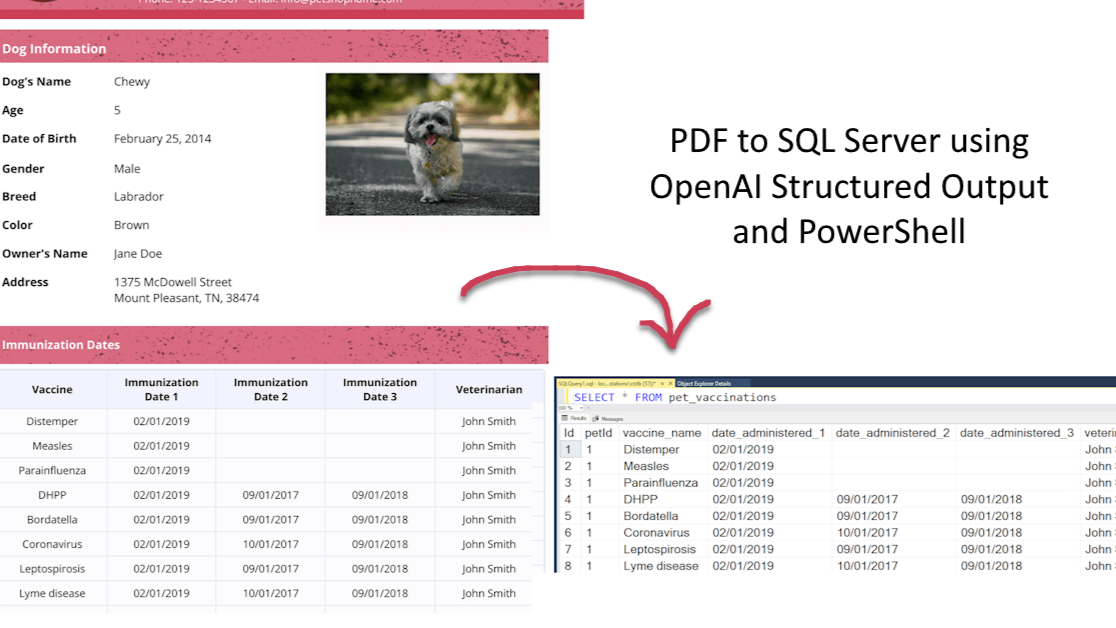

Using a new feature from OpenAI called "Structured Outputs", I was able to extract clean immunization data from a PDF and insert it into a database.

For this proof of concept, I used sample data from a vet clinic instead of real data. Although the image mislabels the dog's breed, it serves as an ideal example. Here's the basic process:

1. Create a schema for the text/data in the PDF

I created a JSON schema based on the data I wanted to extract from the vaccine record.

2. Extract the text from the PDF and convert it into markdown

While various tools can be used, I used the OpenAI API to extract all the data from the file into markdown format.

3. Submit the exported text and schema to OpenAI for parsing

I used PSOpenAI, following their guide on Structured Outputs.

4. Convert the resulting JSON and insert into a database

This step involves using PowerShell to process the JSON output and insert it into SQL Server.

When discussing AI, people often ask about its accuracy. I've had conversations with coworkers about this, and we all agree that AI isn't perfect, but neither are humans who can be tired, bored, annoyed, or unable to understand instructions. One of my coworkers who worked at a lab even saw PhDs struggling to properly categorize data. Ultimately, AI can be frustrating at times, I believe it's a solid solution in its current state. Considering the advancements made in just the past year, I'm excited to see how it will evolve in the coming years.

Let's look at OpenAI's current method, step-by-step.

Creating the schema

Take a closer look at the actual PDF that I used for my testing.

As a DBA, I could immediately see this data would need to be normalized (put into multiple tables to reduce duplication) but my primary goal was to keep this simple, so I decided on two tables: pet and pet_vaccinations

Here's what the JSON would look like, as prescribed by OpenAI's documentation and blog post.

1{

2 "name": "pet",

3 "strict": true,

4 "schema": {

5 "type": "object",

6 "properties": {

7 "pet_name": {

8 "type": "string",

9 "description": "What is the name of the pet?"

10 },

11 "owner_name": {

12 "type": "string",

13 "description": "What is the name of the pet's owner?"

14 },

15 "pet_breed": {

16 "type": "string",

17 "description": "What is the breed of the pet?"

18 },

19 "vaccinations": {

20 "type": "array",

21 "items": {

22 "type": "object",

23 "properties": {

24 "vaccine_name": {

25 "type": "string",

26 "description": "What is the name of the vaccine administered?"

27 },

28 "date_administered_1": {

29 "type": "string",

30 "description": "When was the first dose of the vaccine administered?"

31 },

32 "date_administered_2": {

33 "type": "string",

34 "description": "When was the second dose of the vaccine administered?"

35 },

36 "date_administered_3": {

37 "type": "string",

38 "description": "When was the third dose of the vaccine administered?"

39 },

40 "veterinarian": {

41 "type": "string",

42 "description": "Who is the veterinarian that administered the first dose?"

43 }

44 },

45 "required": [

46 "vaccine_name",

47 "date_administered_1",

48 "date_administered_2",

49 "date_administered_3",

50 "veterinarian"

51 ],

52 "additionalProperties": false

53 },

54 "description": "What vaccinations were given to the pet, including the vaccine names, administration dates, and veterinarian names?"

55 }

56 },

57 "required": [

58 "pet_name",

59 "owner_name",

60 "pet_breed",

61 "vaccinations"

62 ],

63 "additionalProperties": false

64 }

65}

What I love about this JSON is that it's the same questions we'd ask ourselves if we were looking at the PDF as a data source. It's all natural language and you can even give it instructions like "If the dog's name is Chewy and it lists the breed as labrador, say the breed is Shih Tzu."

The importer I created in dbatools.ai, Import-DbaiFile, creates a new table, along with relationships, when it encounters an array. So in the case of the above JSON, it'll create an addition table for vaccinations.

I created all of that JSON using ChatGPT. Initially, ChatGPT pretended it knew how to create well-formed JSON for Structured Outputs but all the output failed. So I attached OpenAI's blog post and the docs page and it fixed itself after two iterations.

One thing I noticed is the more specific you are, the more accurate the structured data becomes -- ChatGPT actually told me this when I was looking for a solution to missing data. So if I asked for each vaccination and their dates in plain-text on one line, it would miss some of the second or third immunization dates. But when I made it vaccination, date_1, date_2, and date_3, it was accurate.

Function Calling vs Structured Outputs

Function Calling waits for return calls:

- Client: here's the schema, give me SQL

- API: Here's SQL run it and give me the results

- Client: here's the results: "360"

- API: "There were 350 sales in 1996"

Whereas Structured Outputs are like "True or false: Pacific Palisades is related to Los Angeles".

Extracting the markdown

When providing the content you want to become a structured object, you can pick plain text or various other formats, I imagine. I picked markdown because it's well-structured.

You can extract this text using any OCR tool, but I decided to use OpenAI itself in the dbatools.ai command, ConvertTo-DbaiMarkdown. The instructions I had to write to get what I needed was suprisingly detailed and I look forward to the day that "extract this entire PDF to markdown" will work.

I also added a couple retries in there. Sometimes it hallucinates or gives partial data and yes it's frustrating but it's WAY more fustrating to categorize hundreds of PDF records manually 😇 Of course, you can extract text locally which will save you tokens and time. For that, you can use tools like:

Convert-PDFToTextusing thePSWritePDFmodule in PowerShellPyPDF2orpdfminerin PythonPDFBoxoriTextin Javapdf-parseorpdf-extractin Node.js

I needed OCR capabilties so I just chose to use OpenAI. Microsoft AI Services also provides OCR services and Google too.

Implementing Structured Outputs with PSOpenAI

Now, let's combine everything using my preferred OpenAI module, PSOpenAI. PSOpenAI is updated often and added support for Structured Outputs a few days after it was released. Here's how you can use this feature:

1# Load the JSON schema

2$jsonSchema = Get-Content -Path $home/Downloads/immunization.json -Raw

3

4# Set up the parameters for the chat completion and make the call

5$params = @{

6 Model = "gpt-4o-2024-08-06"

7 SystemMessage = "You are an assistant that extracts information from pet vaccination records."

8 Message = $markdown

9 Format = "json_schema"

10 JsonSchema = $jsonSchema

11}

12$result = Request-ChatCompletion @params

13

14# Convert result to an object

15$result.Answer | ConvertFrom-Json

This method has a few benefits:

- Data Integrity: All fields are present and formatted correctly.

- Consistency: You get the same data structure every time.

- Flexibility: The JSON schema adapts easily to different documents or data needs.

- Simplicity: The AI handles complex content extraction, reducing your parsing work.

You can also use it to extract schema definitions from documentation, parse error and build logs, or analyze audit reports. Dev could use it pull data from code comments, or extract API specifications from technical docs. The more I write, the cooler it gets.

Try It Yourself

If you use PowerShell and have an OpenAI API key , you can copy/paste this code to see it work.

The PDF to SQL Data Pipeline

The structured data can then be written to SQL Server with code that goes something like the code below. In my own implementation, I used Invoke-DbaQuery because it gives me more control. Also, Write-DbaDbTableData is good for bulk imports and it's unlikely the parsed markdown will be large enough to make it useful.

1# Assuming you've already extracted the data and have it in $structuredOutput

2$parms = @{

3 SqlInstance = "localhost"

4 Database = "tempdb"

5 Schema = "dbo"

6}

7

8# Convert the JSON to a PowerShell object

9$data = $structuredOutput | ConvertFrom-Json

10

11# Write the main vaccination record to SQL

12$data |

13 Select-Object pet_name, owner_name, pet_breed |

14 Write-DbaDbTableData @parms -TableName "VaccinationRecords" -AutoCreateTable

15

16# Write the vaccination details to SQL

17# This method isn't so fast but gives you an idea

18foreach ($vaccination in $data.vaccinations) {

19 # Add pet_name and owner_name to each vaccination record

20 $null = $vaccination | Add-Member -MemberType NoteProperty -Name pet_name -Value $data.pet_name

21 $null = $vaccination | Add-Member -MemberType NoteProperty -Name owner_name -Value $data.owner_name

22

23 # Write to the VaccinationDetails table

24 $vaccination | Write-DbaDbTableData @parms -TableName VaccinationDetails -AutoCreateTable

25}

With this approach, we've created an efficient pipeline:

- Extract text from a PDF using an OpenAI Assistant

- Structure that data using Structured Outputs

- Pipe the structured JSON directly into SQL Server tables

And to make things even more efficient, it can be wrapped up into a combo command like I did with Import-DbaiFile.

1$params = @{

2 Path = "C:\Logs\user_activity.log"

3 JsonSchemaPath = "C:\Schemas\activity_log_schema.json"

4 SqlInstance = "auditdb01"

5 Database = "compliance_logs"

6 Schema = "dbo"

7 SystemMessage = "Extract and validate user activity logs for compliance"

8}

9Import-DbaiFile @params

In this example, the activity_log_schema.json is used to structure the free-text in user_activity.log. Then, that structure is written to the compliance_logs database on auditdb01. Cool suggestion from ChatGPT for that example. I wonder if it could be given audit guidelines and help set a true/false bit for if a machine or activity is in compliance.

One thing you'll notice is that there's a bit of latency for this entire operation (vet pdf to markdown to structured object takes about 45 seconds). I'm certain this will speed up in the future and I'm not worried about it.

By the way, OpenAI is not the only organization to create something like this. It seems pretty common and you can even do it locally. I'll be taking a look at that, maybe next.

Functionality like Structured Outputs further convinces me that once organizations are on board with AI and AI is a wee-bit more reliable, this has got to be the future.

This post is part of my AI Integration for Automation Engineers series. If you found it useful, check out these other posts:

- AI Automation with AI Toolkit for VS Code and GitHub Models: A visual guide

- Getting Started with AI for PowerShell Developers: PSOpenAI and GitHub Models

- Asking Tiny Questions: Local LLMs for PowerShell Devs

- Local Models Mildly Demystified

- Asking Bigger Questions: Remote LLMs for Automation Engineers

- PDF Text to SQL Data: Using OpenAI's Structured Output with PSOpenAI

- Automating Document Classification in SharePoint with Power Platform and AI

- Document Intelligence with Azure Functions: A Practical Implementation

And as always, if you're interested in diving deeper into these topics, my upcoming AI book covers everything from local models to advanced automation techniques. Use code gaipbl45 for 45% off.