Aider AI, the command-line code assistant, is phenomenal

I'm currently working on the final chapter of our book! It's the DevOps chapter, which for a while I thought would probably be about LLMOps or GenAIOps. But I hadn't found a way to apply those to my work as a DevOps person.

After reading Chapter 2 which covers Code Assistants, my coauthor and BFF Brandon suggested we show Aider. Aider is like GitHub Copilot for the command line. I spent a little more time learning it and holy shit, it finally made everything come together.

I kept hearing about how "AI can help with automation" and I'm like HOW though? I understand APIs and I understand automation, but how in the world would we do total automation? And now I FINALLY GET IT. Let me give you the scenario that made it click.

Aider for Pester v4 to v5 migration

After Brandon suggested I abandon talking about Prompty and PromptFlow in favor of Aider, I still didn't write for a few days. I kept waiting for my brain to come up with the perfect scenario and boy did it ever, thanks to some chatter in the #dbatools-dev channel on Slack.

For the past 5 years, we have been talking about migrating from Pester v4 to Pester v5. The downside to having an amazing, huge test suite which gives maintainers, contributors and users confidence that their commands will work, is that when you have to make foundational changes, you have to make changes to alllllll 700+ files.

This is where Aider comes in. I'd love to write a huge blog post about it, and I will once we're finished with the book but let me give you a general idea using code:

    $tests = Get-ChildItem -Path /workspace/tests -Filter *.Tests.ps1

    $prompt = 'All HaveParameter tests must be grouped into ONE It block titled "has all the required parameters". Like this:

        It "has all the required parameters" {
            $requiredParameters = @(
                "SqlInstance",
                "SqlCredential"
            )
            foreach ($param in $requiredParameters) {
                $CommandUnderTest | Should -HaveParameter $param
            }
        }'

    foreach ($test in $tests) {
        aider --message "$prompt" --file $test.FullName --model azure/gpt-4o-mini --no-stream
    }

THIS WORKED! Oh my god, do you know how long it would have taken me to do that for 700 files, all by myself?

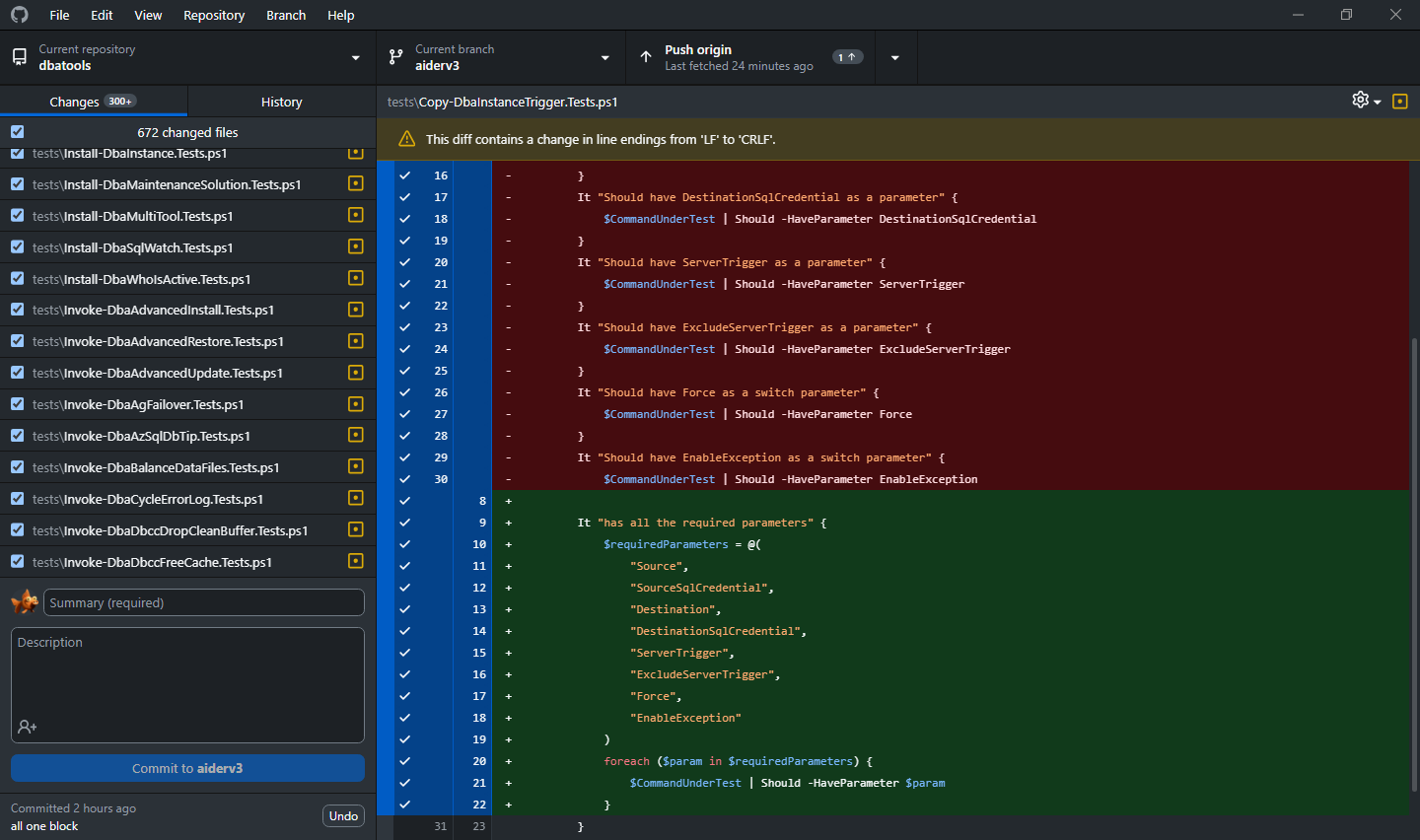

As you can see in the screenshot of the git diff, gpt-4o-mini is surprisingly smart for tasks that are very limited in scope. It removed the old code format, kept the same parameters, and rewrote the code using my suggested style.

For modifying huge blocks of code

When you want something as complex as a Pester v4 to Pester v5 rewrite, that takes an incredibly smart engine and will cost a non-trivial amount of money.

OpenAI o1-preview is TOP but unaffordable

The aider leaderboard is right: OpenAI o1-preview is THE BEST at rewriting Pester v4 to Pester v5. It's wildly accurate but also wildly expensive. I'm talking like 70 cents per command. It also takes a very long time, averaging 40 seconds per command.

Interestingly, resellers like openrouter.ai are the only places I've found that let you use o1-preview. OpenAI doesn't offer it, or at least I didn't see it on the consumer plan. And with Azure, you have to be approved, which my account is not. OpenRouter is basically a convenient reseller, but there's a whopping 10% surcharge, so I'll only be using it when I'm feeling impulsive.

Anthropic Sonnet is incredibly smart and still affordable

A good alternative is Sonnet. AI works best when it has lots of context, and all of Claude's models (except Haiku, which I never use) are exceptional at just getting it. Sonnet was trained on high-quality data and feels intelligent. It always knows what I want.

With prompt caching, my rewrites dropped from 7-10 cents to 2-4 cents per command. It caches static information like PowerShell best practices, so you don't waste tokens on repetitive instructions.

You can send your changing prompt (like "now rewrite this file: $filename") and include static prompts, best practices and lessons learned as read-only files. In aider, you'd use the --read parameter for the static files, while the part of the prompt that actually changes goes in --message.

    $prompt = "here are the updated parameter names, please fix according to the attached standards: SqlInstance, SqlCredential, etc etc"
    $filetochange = "/workspace/tests/Backup-DbaDatabase.Tests.ps1"
    $conventions = "/workspace/tests/conventions.md"

    aider --message $prompt --file $filetochange --read $conventions --no-stream --cache-prompts

I spent $21 on a full rewrite that took 6+ hours. Went to bed, woke up to find I'd hit my daily limit. Got an Azure sub but no Anthropic models there :( So I signed up for OpenRouter, mentioned earlier.

OpenAI 4o didn't work wtf

I am still surprised that 4o was too inaccurate to use. It replaced my Pester tests with unusable garbage and made unintelligent assumptions.

For tiny tasks

While OpenAI 4o wasn't good for a whole file rewrite, the incredibly affordable 4o-mini is amazing for singular tasks. This is where I see myself using mini models the most.

One file costs between $0.0012 and $0.007 and takes anywhere from 5 to 20 seconds to process, depending on the size of the file.
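To put those numbers in perspective for the whole test suite, here's a rough back-of-the-envelope sketch. The 700-file count is the one mentioned earlier; treating the two quoted per-file costs as a low and high bound, and assuming the files run one after another, are my assumptions.

```powershell
# Rough batch estimate from the per-file figures quoted above.
# Assumption: the two quoted costs are treated as a low/high bound.
$fileCount   = 700
$costPerFile = 0.0012, 0.007   # USD per file, as quoted
$secsPerFile = 5, 20           # seconds per file, as quoted

# Multiply each bound by the number of files.
$costRange = $costPerFile | Sort-Object | ForEach-Object { [math]::Round($_ * $fileCount, 2) }
$timeRange = $secsPerFile | Sort-Object | ForEach-Object { [math]::Round($_ * $fileCount / 3600, 1) }

'Cost: ${0} to ${1}' -f $costRange[0], $costRange[1]
'Time: {0} to {1} hours (run sequentially)' -f $timeRange[0], $timeRange[1]
```

That works out to somewhere under five dollars and roughly one to four hours for a full pass, which is why I'm comfortable re-running mini across every file whenever the prompt changes.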

So far I've tried:

- Smart find and replace for short .NET type names with full .NET type names

- Removing comments

- Turning a few blocks of code into one gorgeous block of code, as seen above

I will say that it's not perfect but it'll get you mostly there. It does mess up when there's just too much data. For example, it's struggling with Restore-DbaDatabase.Tests.ps1 because there are over 50 tests in there. So for those one-offs, I'll use 4o, which I'm sure is great for one-off tasks like this. Though again, not totally complex tasks that require original thought.

When writing for these tiny tasks, try to keep your prompts as tight as possible. And just do one thing at a time.

I'm not yet done scaffolding dbatools with aider but if you want to see my branch, you can see it here: aiderv3. I have one config file in the root and one .aider folder with everything else.

For huge tasks

Again, Sonnet is best at this very moment in time, but you really do have to spend a lot of time figuring out your exact prompts. That means running it on various files, seeing what it did wrong, then adding instructions that tell it what to do instead of that wrong thing.
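One way to keep track of those corrections is to append each one to the read-only conventions file that gets passed with --read. This is a minimal sketch of that idea, not how my branch actually does it: `Add-PromptLesson` is a hypothetical helper, and the example lesson wording is made up for illustration.

```powershell
# Hypothetical helper: append one corrective instruction to the conventions
# file that later runs pass to aider with --read.
function Add-PromptLesson {
    param(
        [Parameter(Mandatory)] [string] $Path,    # e.g. /workspace/tests/conventions.md
        [Parameter(Mandatory)] [string] $Lesson   # what to do instead of the mistake
    )
    $line = "- $($Lesson.Trim())"
    # Skip lessons that are already recorded so the file stays tight.
    if ((Test-Path -LiteralPath $Path) -and
        (Get-Content -LiteralPath $Path) -contains $line) {
        return
    }
    Add-Content -LiteralPath $Path -Value $line
}

# Example (illustrative wording, not from my real prompt):
# Add-PromptLesson -Path /workspace/tests/conventions.md -Lesson 'Do not remove existing BeforeAll blocks.'
```

Because the file is static between runs, it should also be the part that benefits from prompt caching.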

I still had not perfected my prompt before doing my $21 rewrite, but re-rewriting it bit-by-bit with mini is working for me so far.

Also, don't do a lot at once. At first, I was trying to do batches of 3 but found the most success when I gave it one command at a time to focus on and rewrite.

Automatic testing

By the way, aider does an amazing thing where it can automatically test your code and keep working on it until the tests pass. My environment isn't set up well yet, but once it is, I'll add it to the mix. Once I do, I'll write about it here.
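Until then, here's an untested sketch of what I expect that to look like. aider has --test-cmd and --auto-test options for this; the Pester one-liner, the `Get-AiderTestArgs` helper name and the paths are my assumptions and will depend on how your environment is set up.

```powershell
# Sketch only: builds the aider arguments for a run that also executes the
# file's Pester tests. The Pester command line and paths are assumptions.
function Get-AiderTestArgs {
    param(
        [Parameter(Mandatory)] [string] $TestFile,
        [Parameter(Mandatory)] [string] $Prompt
    )
    # Exit with the number of failed tests so a failure gives a non-zero exit code.
    $testCmd = 'pwsh -NoProfile -Command "exit (Invoke-Pester -Path ''{0}'' -PassThru).FailedCount"' -f $TestFile
    @(
        '--message', $Prompt
        '--file', $TestFile
        '--test-cmd', $testCmd
        '--auto-test'
        '--no-stream'
    )
}

# Usage, once the test environment works:
# $aiderArgs = Get-AiderTestArgs -TestFile /workspace/tests/Backup-DbaDatabase.Tests.ps1 -Prompt $prompt
# aider @aiderArgs
```

Building the arguments as an array and splatting them keeps the quoting of the test command in one place, which matters because the command itself contains both single and double quotes.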

Update!

My buddy Gilbert Sanchez showed me a better way to write the Pester test so that the failure actually lets you know which parameter failed. No problem, I'll just run aider again!

    $tests = Get-ChildItem -Path /workspace/tests -Filter *.Tests.ps1

    $prompt = 'HaveParameter tests must be structured exactly like this:

        $params = @(
            "SqlInstance",
            "SqlCredential"
        )
        It "has the required parameter: <_>" -ForEach $params {
            $CommandUnderTest | Should -HaveParameter $_
        }'

    foreach ($test in $tests) {
        Write-Output "Processing $test"
        aider --message "$prompt" --file $test.FullName --model azure/gpt-4o-mini --no-stream
    }

It took a bit to get the prompt working consistently for all commands. At first I was describing the change then giving it an example but it's most effective just saying "do it exactly like this" and then showing it.
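To see which files the model still got wrong after a pass, a crude text check can flag anything that doesn't match the new pattern. This is a hypothetical helper I'm sketching for illustration, and it's only a regex match against the exact wording in the prompt above, not a real parser.

```powershell
# Hypothetical check: does a test file's text follow the -ForEach pattern
# from the prompt above? A crude text match, not a parser.
function Test-HaveParameterStyle {
    param([Parameter(Mandatory)] [AllowEmptyString()] [string] $Content)
    # New style: It "has the required parameter: <_>" -ForEach $params {
    $usesForEach = $Content -match '(?m)^\s*It\s+"has the required parameter: <_>"\s+-ForEach\s+\$params\s*\{'
    # Old style: foreach ($param in $requiredParameters)
    $usesOldLoop = $Content -match 'foreach\s*\(\s*\$param\s+in\s+\$requiredParameters\s*\)'
    $usesForEach -and -not $usesOldLoop
}

# List the files that still need another pass:
# Get-ChildItem -Path /workspace/tests -Filter *.Tests.ps1 |
#     Where-Object { -not (Test-HaveParameterStyle -Content (Get-Content $_.FullName -Raw)) }
```

Anything it flags can go back through aider one file at a time instead of rerunning the whole batch.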