Local models mildly demystified

If you're like me and have a hard time wrapping your mind around how AI works, Microsoft's AI Toolkit for Visual Studio Code makes it unusually approachable. Recent versions work on Windows, Linux AND macOS too!

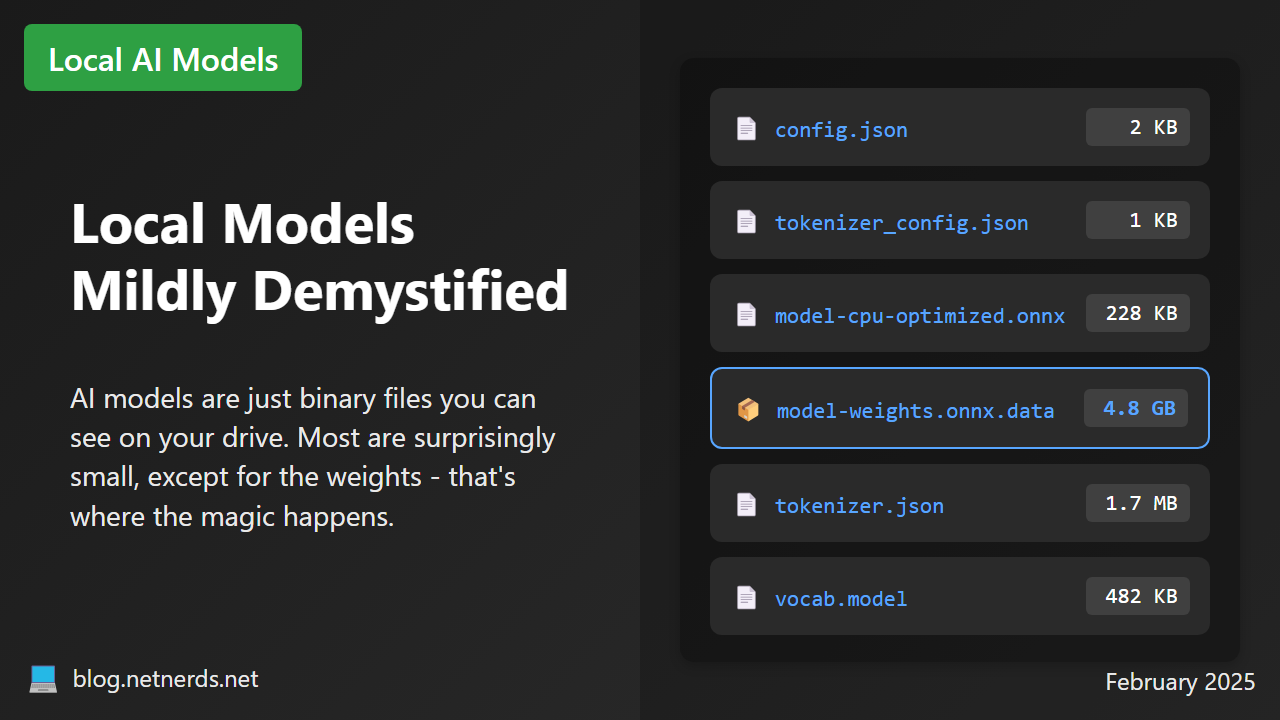

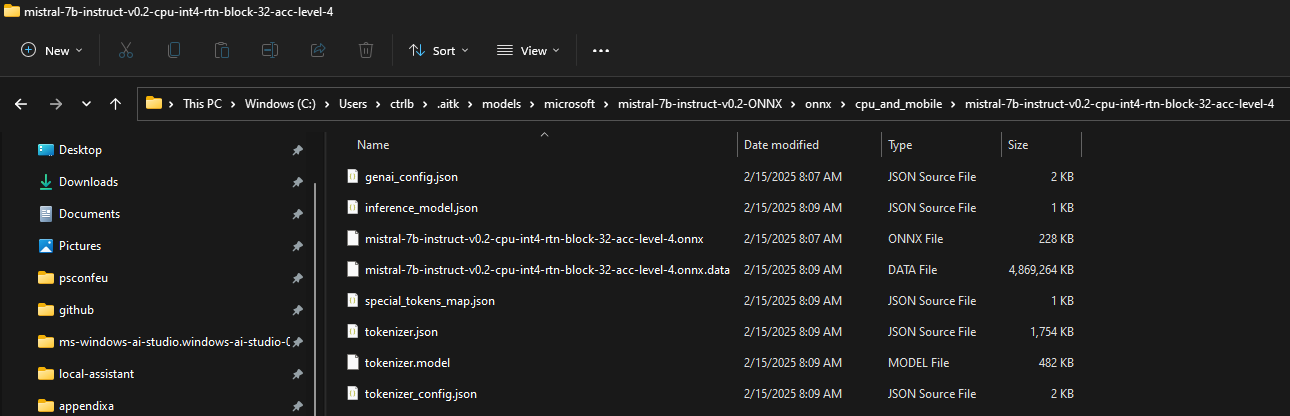

I didn't expect LLMs to be "just" binary files, but the VS Code extension helped me understand how they work by letting me download and run AI models locally. I even got to SEE the models on my drive.

Before trying the AI Toolkit, I tried jan.ai and other apps like LM Studio that let users run models locally, but I kept selecting models that were way too big for my machine. I had no idea how it all worked, so I maxed out all of my resources and brought my VM to its knees.

So when I first found AI Toolkit, I especially appreciated that it offered a super limited selection of models that work on most computers, and I haven't destroyed my machine since. I actually found it almost a year ago, when it ONLY ran local models, but now AI Toolkit works with both local and remote models. Check out my earlier posts (linked at the bottom) for more info on AI Toolkit and GitHub Models.

Running models on my machine and watching my RAM and CPU usage spike made me curious about how it all worked, so I asked claude.ai to explain it using SQL Server, .NET and PowerShell comparisons. For .NET, Claude explained that loading a model is similar to deserializing an object from storage, and that many machine learning libraries in .NET, like ML.NET, provide methods to load pre-trained models from files or streams.

For PowerShell, Claude compared it to loading a module into memory. This didn't click for me, so I asked for code examples, which made it clearer:

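```powershell
# Add the necessary assembly references for ML.NET
# (exact DLL names depend on the ML.NET package; its dependencies may need loading too)
Add-Type -Path ./Microsoft.ML.dll

# Create an MLContext, the entry point for ML.NET operations
$mlContext = New-Object Microsoft.ML.MLContext

# Load the trained model from a file.
# Model.Load returns the model and hands back its input schema through an
# out parameter, which PowerShell passes as a [ref] to an existing variable.
$modelInputSchema = $null
$trainedModel = $mlContext.Model.Load("./model.zip", [ref]$modelInputSchema)
```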

Oh, it's like loading a text file or DLL! That makes sense.
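The "deserializing an object" comparison also fits Python, the language of the PyTorch example later in this post. Below is a toy sketch, not a real model format: a made-up dictionary stands in for a model, and Python's built-in pickle module (which PyTorch's format builds on) turns it into bytes and back.

```python
import pickle

# A toy stand-in for a model: a tiny "architecture" description plus learned numbers.
# The names and values are made up; real model files are far larger and
# use framework-specific formats.
toy_model = {
    "architecture": ["linear", "relu", "linear"],
    "weights": {"layer1": [0.5, -1.25], "layer2": [2.0]},
}

# "Save" the model: serialize the object into bytes (what would end up on disk)
blob = pickle.dumps(toy_model)

# "Load" the model: deserialize the bytes back into an equivalent object
loaded = pickle.loads(blob)

print(type(blob).__name__)
print(loaded == toy_model)
```

One caveat worth knowing: unpickling can execute code embedded in the file, so only load model files from sources you trust.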

What's Inside AI Model Files?

As an infrastructure person, I probably won't create production models soon, though maybe I'm wrong. Still, I wanted to know what was "inside" these binary files. As expected, they don't contain human-readable content like words or code. Instead, they hold serialized data structures that represent the model's parameters and architecture. The insides typically include:

| Component | Description | Simple Explanation |
|---|---|---|
| Weights and Biases | Learned parameter values stored as binary floating-point numbers | These are the "answers" the model learned during training, such as how strongly certain patterns should influence predictions. Think of them as the model's memory or knowledge. They make up the bulk of a model's file size. |
| Model Architecture | Neural network structure details (layers, layer types, activation functions) | The "blueprint" of how the model is structured, like a recipe or building plan that defines how information flows through the system. This is usually quite small in file size. |
| Metadata | Training configuration, optimizer state, and versioning information | Extra details about how the model was created and should be used, similar to the documentation that comes with software. |
| Serialization Format | Framework-specific storage formats (TensorFlow: Protocol Buffers, PyTorch: Pickle, ONNX: its own Protocol Buffers schema) | How all this information gets packed into files, like different file formats for different software platforms. |
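To see what "binary floating-point numbers" means in practice, here is a small sketch using Python's struct module. It assumes 32-bit floats in little-endian byte order, which is one common layout; real model files wrap the numbers in headers and other structure, and many local models use 16-bit or smaller values. The four weights are made up.

```python
import struct

# Hypothetical weights for one tiny layer; real models store millions or billions
weights = [0.5, -1.25, 2.0, 0.125]

# Pack them as little-endian 32-bit floats ("<" = little-endian, "f" = float32),
# roughly how raw weight data sits inside a model file
raw = struct.pack(f"<{len(weights)}f", *weights)

print(len(raw))   # 4 values x 4 bytes each
print(raw.hex())  # raw bytes: no words or code, just numbers in binary form

# Decoding the same bytes with the same layout recovers the values
decoded = list(struct.unpack(f"<{len(raw) // 4}f", raw))
print(decoded)
```

The decode step only works because the reader knows the layout in advance, which is why you need the matching framework to make sense of a real model file.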

Models with more parameters generally produce better results because they can capture more patterns, but they also need WAY more RAM and processing power. That explains why some models crashed my machine: I had simply picked models far too large for it.
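A rough rule of thumb makes the size problem concrete: the memory needed just for a model's weights is about the number of parameters times the bytes each parameter takes. This sketch uses a hypothetical 7-billion-parameter model and ignores runtime overhead, so actual RAM use will be higher.

```python
def weights_size_gb(num_params: int, bytes_per_param: float) -> float:
    """Rough size of a model's weights alone, in gigabytes (1 GB = 10**9 bytes).

    Ignores runtime overhead (working memory, context, the app itself),
    so real RAM usage will be higher.
    """
    return num_params * bytes_per_param / 10**9


# A hypothetical 7-billion-parameter model at a few common precisions
for label, nbytes in [("32-bit float", 4), ("16-bit float", 2), ("4-bit quantized", 0.5)]:
    print(f"{label}: ~{weights_size_gb(7_000_000_000, nbytes):.1f} GB")
```

At 16-bit precision that hypothetical model needs about 14 GB for its weights alone, more than many laptops and small VMs have to spare.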

Viewing the Contents

Using cat on these files won't show readable text since they're binary. To inspect or use them, you need the appropriate machine learning framework:

- TensorFlow: `tf.keras.models.load_model()` or `tf.saved_model.load()`
- PyTorch: `torch.load()`
- ONNX: `onnx.load()`
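Even without PyTorch installed, you can peek at a checkpoint's container. Since PyTorch 1.6, `torch.save` writes a zip archive by default, so Python's standard zipfile module can list what's inside. This is a sketch under that assumption, and `list_checkpoint_entries` is a hypothetical helper name. The entries hold pickled structure plus raw tensor bytes, which still need PyTorch to interpret.

```python
import zipfile


def list_checkpoint_entries(path):
    """Return the entry names inside a zip-based checkpoint, or None if it isn't a zip.

    Assumes the file was written by torch.save in PyTorch 1.6 or later,
    which uses a zip container by default. Older checkpoints and other
    formats (such as ONNX's protobuf files) are not zip archives.
    """
    if not zipfile.is_zipfile(path):
        return None
    with zipfile.ZipFile(path) as archive:
        return archive.namelist()
```

Usage would look like `list_checkpoint_entries("model.pt")`, where the path is a placeholder for your own file.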

Claude told me that this is how you might load a PyTorch model and inspect its parameters, but I haven't tried. The code helped bring it all together in my mind, though.

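```python
import torch

# Load onto the CPU. weights_only=False is required to unpickle a full model
# object in PyTorch 2.6+, and should only be used with files you trust.
obj = torch.load("model_file.bin", map_location="cpu", weights_only=False)

if isinstance(obj, torch.nn.Module):
    # A full model object: printing it shows the architecture (its layers)
    print(obj)
    params = obj.named_parameters()
else:
    # Many .bin files hold a state_dict instead: parameter names mapped to
    # tensors, with no architecture attached
    params = obj.items()

# Inspect each parameter's name and shape
for name, param in params:
    print(name, tuple(param.shape))
```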
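The shapes printed by that loop are enough to count a model's parameters, which is the number that drives file size and RAM use. Here is a minimal sketch with made-up shapes for a tiny two-layer network (4 inputs, 8 hidden units, 2 outputs), using the (out_features, in_features) order PyTorch uses for linear-layer weights:

```python
from math import prod

# Hypothetical (name, shape) pairs for a tiny two-layer network:
# 4 inputs -> 8 hidden units -> 2 outputs
shapes = {
    "layer1.weight": (8, 4),
    "layer1.bias": (8,),
    "layer2.weight": (2, 8),
    "layer2.bias": (2,),
}

# Each parameter tensor holds prod(shape) numbers; the model's size is their sum
total = sum(prod(shape) for shape in shapes.values())
print(total)
```

For this toy network the total is 58. With a real PyTorch model loaded, the equivalent is `sum(p.numel() for p in model.parameters())`.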

For architecture or weight inspection without running the model, tools like Netron can visualize models stored in various formats. This isn't super interesting to me beyond seeing it once, so here it is:

While I'm not yet building my own models, understanding that they're essentially binary files with structured data helps make AI feel more approachable. Microsoft's toolkit offers an easy way to experiment with AI without deep machine learning expertise.

This post is part of my AI Integration for Automation Engineers series. If you found it helpful, check out these other posts:

- AI Automation with AI Toolkit for VS Code and GitHub Models: A visual guide

- Getting Started with AI for PowerShell Developers: PSOpenAI and GitHub Models

- Asking Tiny Questions: Local LLMs for PowerShell Devs

- Local Models Mildly Demystified

- Asking Bigger Questions: Remote LLMs for Automation Engineers

- PDF Text to SQL Data: Using OpenAI's Structured Output with PSOpenAI

- Automating Document Classification in SharePoint with Power Platform and AI

- Document Intelligence with Azure Functions: A Practical Implementation

And as always, if you're interested in diving deeper into these topics, my upcoming AI book covers everything from local models to advanced automation techniques. Use code gaipbl45 for 45% off.