Automating Document Classification in SharePoint with Power Platform and AI

Getting users to enter metadata is THE challenge when managing SharePoint document libraries. The moment we set up custom columns for proper document classification, we create a burden for our users who just want to upload files and move on. As an on-again/off-again SharePoint dev, I find metadata super useful but even I don't want to fill out 10 fields just to upload a document to SharePoint.

We need these descriptions, though, because they help with search, filtering, compliance, and a million other things. It's been the same challenge ever since I got into SharePoint when it first came out: we need the data, users refuse to provide it, and each side has legitimate points.

This is where AI can be genuinely useful and exactly what I've wanted for as long as I can remember.

Once I understood how AI and structured output work, I built a SharePoint Auto Categorizer using Microsoft Power Automate and an Azure Function. It classifies documents, extracts metadata, and updates SharePoint properties AUTOMATICALLY!

This post continues my series on practical AI applications. In my post about tiny questions, I explored using local LLMs with PowerShell for simple tasks. Then in my post about bigger questions, I showed how cloud models can handle more complex data like command outputs. Today, we're applying these concepts to document management with SharePoint.

Unlike the MP3 filename cleaning in my first post or the command output parsing in my second, document classification requires comprehensive analysis of entire documents - something that demands the larger context windows and processing power that cloud models provide.

How it works

The workflow is straightforward:

- User uploads a document to SharePoint

- Power Automate detects the new file

- The file gets sent to an Azure Function

- The Azure Function extracts text and uses AI to identify relevant metadata

- Power Automate updates the SharePoint properties with the extracted data

The beauty is that users just upload files like they always wanted to, but we still get properly classified documents. Even better, the AI is consistent and thorough - no more rushed tagging jobs where half the fields are blank or contain "N/A".

Setting up the SharePoint structure

First, we need a document library with custom columns to store our metadata:

- Create a document library in SharePoint

- Add these columns:

- Case Number (Single line of text)

- Document Type (Choice: Case Brief, Legal Opinion, Contract, etc.)

- Jurisdiction (Single line of text)

- Keywords (Multiple lines of text)

- Parties Involved (Multiple lines of text)

- Area of Law (Choice: Criminal Law, Civil Law, etc.)

Nothing fancy here - just the standard SharePoint columns you'd create for any document management system.

Building the Power Automate flow

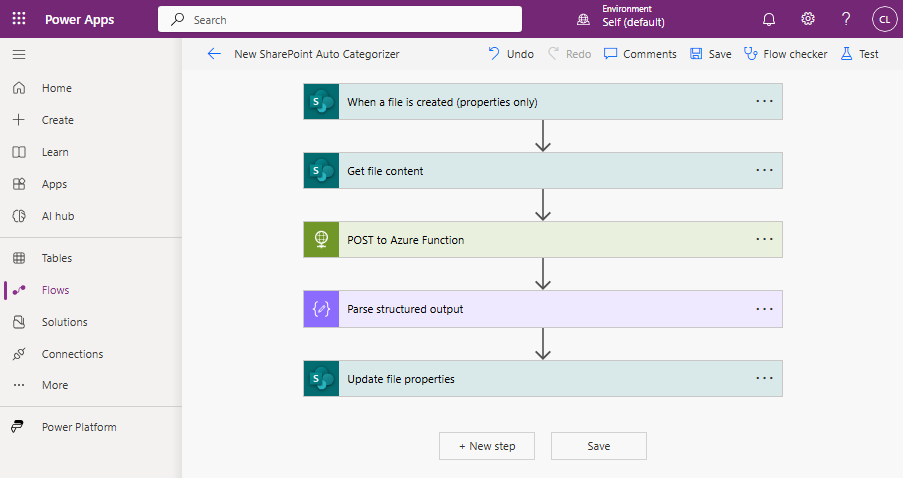

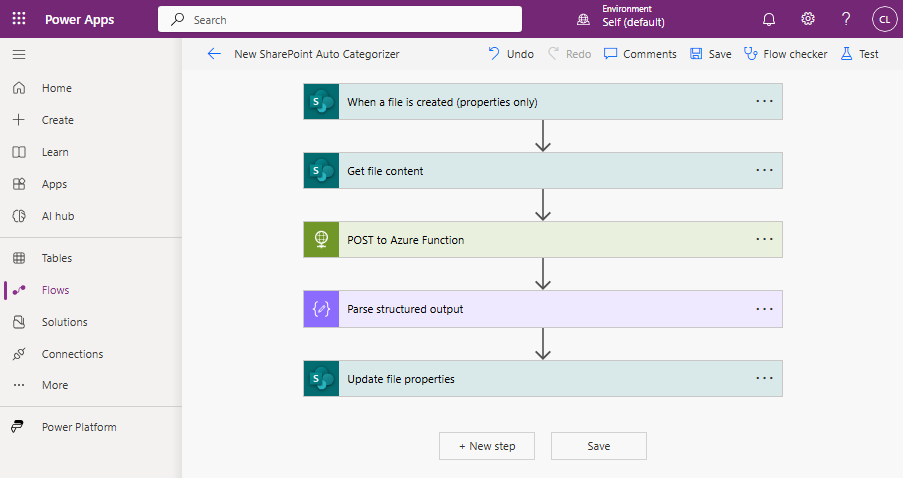

Next, we need to build a flow that processes files as they're uploaded:

Step 1: Create and configure the trigger

- Create a new Automated cloud flow

- Select the "When a file is created (properties only)" trigger

- Set your SharePoint site and library name

Step 2: Get the file content

- Add a Get file content action

- Select the same site

- For File Identifier, use the ID from the trigger

Step 3: Process the file with Azure Functions

- Add an HTTP action

- Set Method to POST

- Enter your Azure Function URL

- Add headers: `Content-Type: application/octet-stream` and `x-openai-model: gpt-4o`

- Set the body to the file content from Step 2
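If you want to exercise the function outside Power Automate first, the same POST can be built in a few lines of Python. This is only a sketch: `FUNCTION_URL` is a hypothetical placeholder, and `build_classify_request` is a name I made up for illustration. The two headers are the ones from Step 3.

```python
import urllib.request

# Hypothetical placeholder: substitute your own Azure Function URL (and key, if any).
FUNCTION_URL = "https://example.azurewebsites.net/api/classify"


def build_classify_request(file_bytes: bytes, model: str = "gpt-4o") -> urllib.request.Request:
    """Build the same POST request that the HTTP action in Step 3 sends."""
    return urllib.request.Request(
        FUNCTION_URL,
        data=file_bytes,  # raw file content, like the body set in the flow
        method="POST",
        headers={
            "Content-Type": "application/octet-stream",
            "x-openai-model": model,
        },
    )


# Building the request does not touch the network.
request = build_classify_request(b"%PDF-1.7 example bytes")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it, which is a quick way to confirm the function responds before wiring it into the flow.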

Step 4: Handle the response

- Add a Parse JSON action

- Use the output from the HTTP request

- Define a schema matching your Azure Function's response
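The schema for Parse JSON depends entirely on what your function returns, so treat the following as a sketch under assumptions. The property names (`caseNumber`, `documentType`, and so on) are hypothetical, chosen to mirror the library columns; swap in whatever your Azure Function actually emits. The script prints a JSON schema you could paste into the Parse JSON action.

```python
import json

# Assumed property names, one per SharePoint column. These are illustrative;
# the real names are whatever your Azure Function returns.
FIELDS = {
    "caseNumber": "string",
    "documentType": "string",
    "jurisdiction": "string",
    "keywords": "string",
    "partiesInvolved": "string",
    "areaOfLaw": "string",
}


def build_parse_json_schema(fields: dict) -> dict:
    """Return a JSON schema describing a flat object with the given fields."""
    return {
        "type": "object",
        "properties": {name: {"type": kind} for name, kind in fields.items()},
        "required": list(fields),
    }


print(json.dumps(build_parse_json_schema(FIELDS), indent=2))
```

Listing every field under `required` makes the flow fail loudly when the function omits one. Drop a field from that list if you would rather let it through as empty.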

Step 5: Update the SharePoint properties

- Add an Update file properties action

- Select your site and library

- ID should be the ID from the trigger

- Map each SharePoint column to the corresponding values from the parsed JSON
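In the flow designer this mapping is done by picking dynamic content for each column, but the logic is easier to see written out. The sketch below assumes the same hypothetical property names as above (`caseNumber`, `documentType`, and so on) and shows one reasonable way to turn parsed JSON into column values. It is an illustration, not what Power Automate runs internally.

```python
# Assumed JSON property name -> SharePoint column display name.
# The column names come from the library set up earlier; the JSON
# property names are hypothetical.
COLUMN_MAP = {
    "caseNumber": "Case Number",
    "documentType": "Document Type",
    "jurisdiction": "Jurisdiction",
    "keywords": "Keywords",
    "partiesInvolved": "Parties Involved",
    "areaOfLaw": "Area of Law",
}


def to_column_values(parsed: dict) -> dict:
    """Map parsed JSON onto column names, skipping missing or blank values."""
    values = {}
    for key, column in COLUMN_MAP.items():
        value = parsed.get(key)
        if isinstance(value, list):
            # Flatten lists (e.g. several keywords) into one text value.
            value = "; ".join(str(item) for item in value)
        if value is not None and str(value).strip():
            values[column] = str(value).strip()
    return values


print(to_column_values({"caseNumber": "21-123", "keywords": ["breach", "damages"]}))
```

Skipping blank values means a field the model could not find stays empty in SharePoint instead of being filled with a placeholder.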

Here's what the core flow looks like:

What happens behind the scenes

When the flow runs, it sends the document to our Azure Function which:

- Extracts text from the document (PDF, Word, etc.)

- Sends that text to an AI model (I'm using GPT-4o-mini)

- Returns structured metadata in a consistent format

I've configured the AI to act as a document classifier. I didn't even need to give it specific knowledge of legal documents, because the model already knows the domain well enough from its training.

The function analyzes the content and identifies the case number, document type, jurisdiction, etc.
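To make those steps concrete, here is a rough sketch of the classify step in Python. It is not the actual Azure Function, which is covered in the follow-up post. The field names, the prompt wording, and the `ask_model` parameter are all assumptions for illustration; `ask_model` stands in for the real chat-completion call so the example runs without network access.

```python
import json
from typing import Callable, Dict, List

# Assumed field names mirroring the SharePoint columns (hypothetical).
FIELDS = [
    "caseNumber", "documentType", "jurisdiction",
    "keywords", "partiesInvolved", "areaOfLaw",
]

# Illustrative prompt only; the author's real prompt is not shown in this post.
SYSTEM_PROMPT = (
    "You are a document classifier. Read the document and reply with a JSON "
    "object containing exactly these keys: " + ", ".join(FIELDS) + "."
)


def build_messages(document_text: str) -> List[Dict[str, str]]:
    """Build chat messages: classifier instructions plus the extracted text."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": document_text},
    ]


def classify(document_text: str, ask_model: Callable[[List[Dict[str, str]]], str]) -> Dict[str, str]:
    """Send the text to the model and return only the expected fields."""
    reply = ask_model(build_messages(document_text))
    data = json.loads(reply)
    # Ignore unexpected keys and default missing ones to an empty string.
    return {name: data.get(name, "") for name in FIELDS}


def fake_model(messages: List[Dict[str, str]]) -> str:
    """Stand-in for a real model call, returning a canned reply."""
    return json.dumps({"caseNumber": "A-1", "documentType": "Contract"})


print(classify("Sample agreement text", fake_model))
```

Injecting the model call as a function keeps the parsing logic testable on its own, and it makes swapping providers a one-line change.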

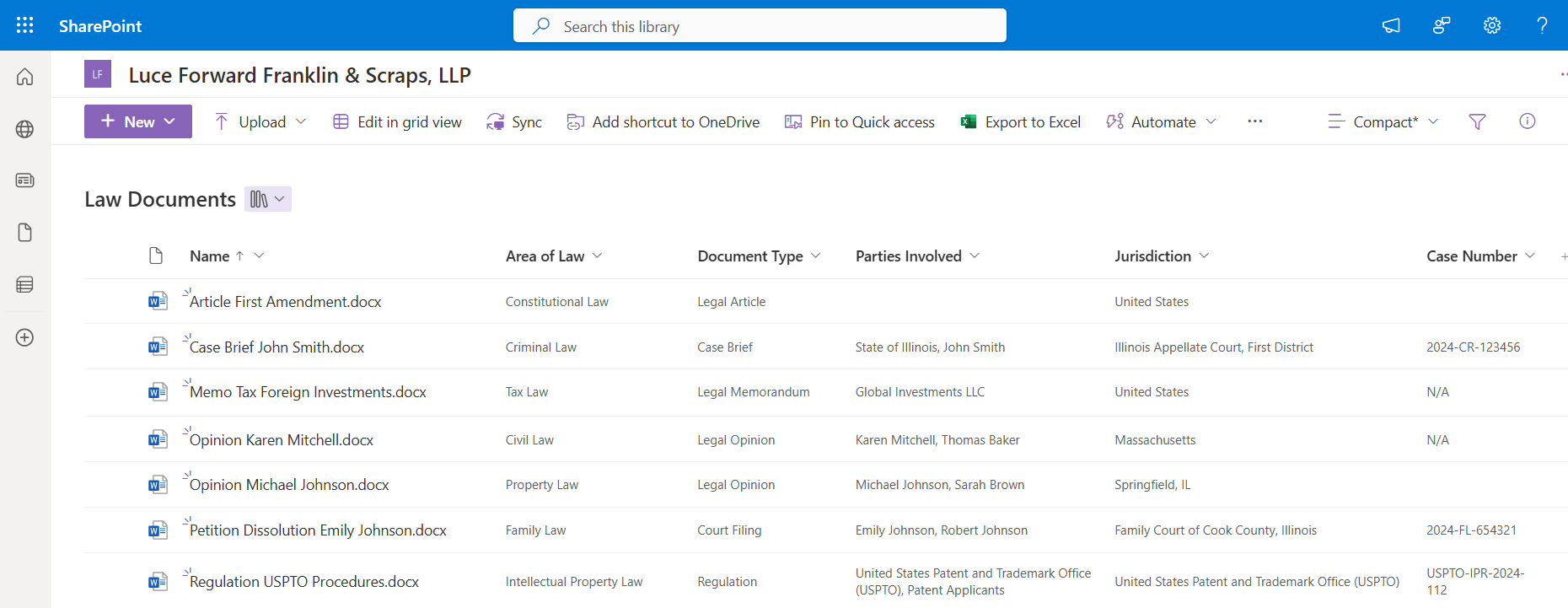

Results and benefits

After implementing this system:

- Documents are properly categorized without user effort

- Metadata is consistent across all documents

- Search and filtering work reliably

- Users are happy because they don't have to fill out forms

- Administrators are happy because documents are properly classified

Just look at this

The real magic is that the AI isn't just looking for exact matches or keywords - it understands the document. It can identify a contract even if the word "contract" never appears explicitly. It can extract parties based on context, not just by looking for names.

Comparing OpenAI's gpt-4o-mini and Microsoft Syntex for SharePoint Document Classification

While building this system, I also looked into Microsoft's Syntex, which is their SharePoint solution for AI-powered document classification. If you're trying to decide whether to build a custom AI pipeline with OpenAI or use Syntex, here's a breakdown of the differences.

Cost Comparison

| Service | Cost per unit |
|---|---|
| OpenAI gpt-4o-mini | $0.15 per 1M input tokens, $0.60 per 1M output tokens |
| Microsoft Syntex | $0.005 per page processed |

To put this into perspective, I ran a one-page legal opinion through OpenAI's API and estimated that it required 275 tokens. That means processing that document with gpt-4o-mini costs about $0.00004125 for input, plus the output cost for the returned metadata. The same document processed through Syntex would be $0.005 per page, making Syntex about 120x more expensive than the input cost alone for this specific document. The exact ratio depends on document length and on how much metadata the model returns.
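The arithmetic is easy to reproduce. The sketch below uses the prices from the table and the 275-token estimate. The function name and the `output_tokens` parameter are mine, and output tokens default to zero because I did not measure them for this document.

```python
# Prices from the cost table above (USD).
INPUT_PRICE_PER_MILLION = 0.15
OUTPUT_PRICE_PER_MILLION = 0.60
SYNTEX_PRICE_PER_PAGE = 0.005


def openai_cost(input_tokens: int, output_tokens: int = 0) -> float:
    """Cost of one gpt-4o-mini call in USD for the given token counts."""
    return (
        input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
        + output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    )


# One-page legal opinion, estimated at 275 input tokens.
input_only = openai_cost(275)
ratio = SYNTEX_PRICE_PER_PAGE / input_only

print(f"gpt-4o-mini input cost: ${input_only:.8f}")
print(f"Syntex cost for one page: ${SYNTEX_PRICE_PER_PAGE:.3f}")
print(f"Syntex is roughly {ratio:.0f}x the input cost")
```

Pass a measured `output_tokens` value to see how much the returned metadata narrows the gap for your own documents.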

Accuracy & Customization

- OpenAI gpt-4o-mini: Provides flexible, highly customizable document classification. You can tailor the AI's behavior by writing specific prompts, fine-tuning, or integrating additional logic in your Azure Function.

- Microsoft Syntex: Designed specifically for SharePoint, with built-in AI models for classifying documents, extracting metadata, and applying structured information directly to SharePoint columns.

I often get questions about AI accuracy. As I discussed in my earlier post on extracting PDF data into SQL using structured output, AI isn't perfect, but neither are humans. People get tired, bored, or distracted when categorizing documents, especially at large volumes, and even highly trained professionals struggle to categorize consistently. I haven't conducted formal testing myself, but it's reasonable to expect AI models to be more consistent than manual processes for repetitive tasks, because the model applies the same logic to every document without fatigue. That's nearly impossible for humans working through large document sets.

Integration & Ease of Use

- OpenAI gpt-4o-mini: Requires setting up Power Automate + Azure Functions, managing API calls, and handling authentication. However, it gives full control over AI behavior and metadata structure.

- Microsoft Syntex: Seamlessly integrates with SharePoint without needing Power Automate or custom development. However, customization options are more limited, and it relies on Microsoft's pre-built models.

When to Choose Which?

| Use Case | Best Option |
|---|---|
| You need full control over AI logic, customization, or external APIs | OpenAI gpt-4o-mini |
| You want an out-of-the-box SharePoint solution with minimal setup | Microsoft Syntex |
| Your documents are complex, long, or require deep contextual understanding | OpenAI gpt-4o-mini |
| You want to avoid API calls and prefer a Microsoft-native approach | Microsoft Syntex |

Privacy & Alternative AI Options

Given my privacy requirements, I use Microsoft's Azure OpenAI platform because of its focus on security, confidentiality, and privacy. Basically, I trust it.

That said, you don't have to use OpenAI, and there are other solid options out there. So far, I've found Sonnet (from Anthropic) and OpenAI's models to be the most accurate. Sonnet runs great on Amazon Bedrock, making it a good alternative if you're already deep into the AWS ecosystem. Depending on your infrastructure and privacy needs, either of these options can work well for SharePoint document classification.

Final Thoughts

If you're looking for maximum flexibility, building your own pipeline on top of a model API gives you more control and is significantly cheaper per document at small scales. However, if you prefer a simple, native SharePoint solution and don't need extensive customization, Syntex is the easier choice.

Ultimately, both solutions remove the burden of manual metadata entry—pick the one that fits your architecture and cost model best.

Next steps

This post showed how to configure the SharePoint and Power Automate parts of the solution. In my follow-up post on Document Intelligence with Azure Functions, I detail the Azure Function that powers the AI processing - how it extracts text, interacts with OpenAI's API, and returns structured data.

If you've struggled with SharePoint metadata (and who hasn't?), this approach offers a way forward that satisfies both users and administrators. Users get to do what they've always wanted - just upload files and move on - while you still get properly organized documents.

This post is part of my AI Integration for Automation Engineers series. If you found it helpful, check out these other posts:

- AI Automation with AI Toolkit for VS Code and GitHub Models: A visual guide

- Getting Started with AI for PowerShell Developers: PSOpenAI and GitHub Models

- Asking Tiny Questions: Local LLMs for PowerShell Devs

- Local Models Mildly Demystified

- Asking Bigger Questions: Remote LLMs for Automation Engineers

- PDF Text to SQL Data: Using OpenAI's Structured Output with PSOpenAI

- Automating Document Classification in SharePoint with Power Platform and AI

- Document Intelligence with Azure Functions: A Practical Implementation

Also, if you liked the content in this blog post, most of it came from my research for my upcoming AI book, published by Manning. You can use the code gaipbl45 to get 45% off.