Update your blog with an AI CLI (No, really)

We have hundreds of blog posts on dbatools, dating back to 2016. Links rot. Commands change. Screenshots turn into ancient Windows PowerShell blue screens that look embarrassing in 2025.

I'd never have the time or interest to fix all of this by hand...unless my only task was to craft an effective prompt. And it looks like I pulled it off!

The problem with blog maintenance

After I rebuilt dbatools.io with AI and a dream, I had another problem: the actual blog content. Years of posts with outdated information, broken links, old screenshots, and examples that might not work anymore with current dbatools versions.

I tried to maintain this stuff manually over the years. I'd fix a post here, update a screenshot there. It never kept up. There was always something more important to work on and updating blog posts isn't very interesting or creative.

And I can't do it with straight PowerShell because true blog maintenance requires judgment. It's not find-and-replace. You can't just run a script that says "update all the links" because some links should stay historical. Some screenshots should be preserved because they show what the tool looked like at that moment. Some outdated information is actually correct in context.

And also! There are a lot of posts. Not just on dbatools but also here on my personal blog. Manual blog maintenance was never going to be on my agenda until AI.

Why this is possible now

Incredibly intelligent, cloud-hosted AI models can handle long, detailed prompts without falling apart. Also, agentic CLIs became available and they can do things like SEARCH THE WEB to find a new location of a blog post or social media handle. What a dream.

After using Claude Code CLI so successfully to migrate dbatools tests from Pester v4 to v5, I figured I could update not just dbatools.io but the content as well. I knew it'd take finesse but I worked it all out with Claude to great success.

For the prompt, I wrote a massive instruction document for updating blog posts. It's pages long. It covers:

- When to preserve historical content vs update it

- How to handle broken links

- Converting old PowerShell screenshots to modern shortcodes

- When to modernize code examples (and when not to)

- Dealing with outdated Twitter embeds

- Technical accuracy for timeless topics vs dated announcements

The instructions themselves required nuance. I had to tell it things like: "Update 'coming soon' references if those features have shipped, but leave version numbers and download counts alone—those are historical facts."

I couldn't believe it when a less capable model, Haiku 4.5, handled it. All of it.

What it actually did

I pointed Claude Code at my blog repository, gave it the instruction document, and piped in 100+ markdown files.

Here's what it found and fixed (see the full commit):

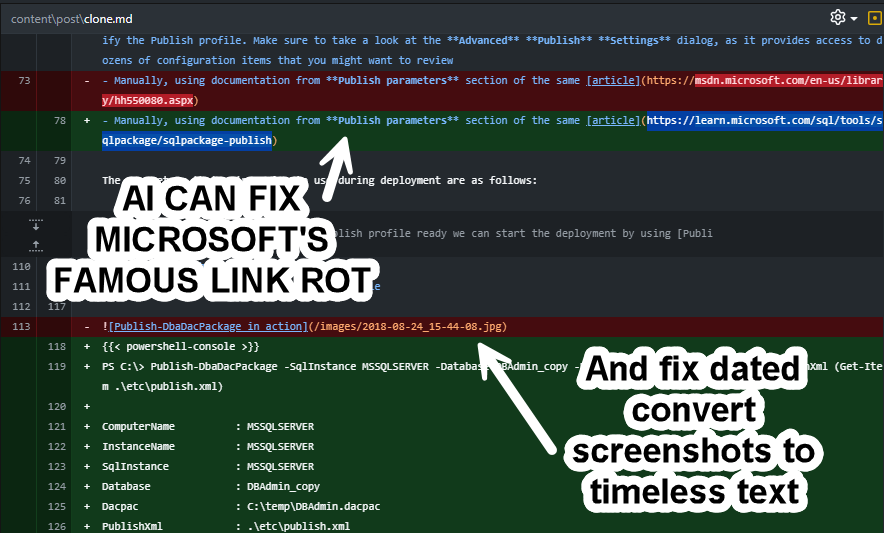

Link rot: Hundreds of dead links. Microsoft has reorganized their docs multiple times since 2016. Twitter links now go nowhere because everybody left (plus, I don't want to give it additional traffic). GitHub paths changed. It tested every link and updated or removed them.
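For a sense of what "testing every link" involves, here is a minimal sketch in plain PowerShell. It is not what Claude Code ran; `Get-MarkdownLink` and `Test-Link` are hypothetical helper names made up for this illustration, and the regex only understands inline `[text](url)` links.

```powershell
# Hypothetical helper: pull http(s) URLs out of inline markdown links.
# Also matches image syntax, since ![alt](url) contains [alt](url).
function Get-MarkdownLink {
    param([string]$Text)
    $pattern = '\[[^\]]*\]\((https?://[^)\s]+)\)'
    [regex]::Matches($Text, $pattern) |
        ForEach-Object { $_.Groups[1].Value } |
        Sort-Object -Unique
}

# Hypothetical helper: report whether a URL still responds to a HEAD request.
function Test-Link {
    param([Parameter(Mandatory)][string]$Url)
    try {
        $response = Invoke-WebRequest -Uri $Url -Method Head -TimeoutSec 15 -UseBasicParsing -ErrorAction Stop
        [pscustomobject]@{ Url = $Url; Status = [int]$response.StatusCode; Ok = $true }
    } catch {
        [pscustomobject]@{ Url = $Url; Status = $null; Ok = $false }
    }
}

# Scan every markdown file in the current folder and list the links that failed.
Get-ChildItem -Filter *.md | ForEach-Object {
    $file = $_.Name
    Get-MarkdownLink -Text (Get-Content $_.FullName -Raw) | ForEach-Object {
        Test-Link -Url $_ | Add-Member -NotePropertyName File -NotePropertyValue $file -PassThru
    }
} | Where-Object { -not $_.Ok }
```

A script like this can only flag candidates. Some servers reject HEAD requests, and nothing here can decide whether a dead link should be replaced, removed, or left alone as history. That decision is the part that needed the AI.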

Outdated screenshots: This was the big one. Old PowerShell console screenshots—you know the ones, that classic blue Windows PowerShell look—got extracted and converted to our new console shortcode format. The code and output from the images became properly formatted, syntax-highlighted text.

Twitter embeds: All those old tweet embeds? Gone. It converted them to brief paraphrased statements. Where it could find people's Bluesky profiles, it linked those instead.

Code examples: It checked whether commands still worked in current dbatools. Updated parameter names that had changed. Found places where splatting made sense and converted them—but only when appropriate, not mechanically.
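If splatting is new to you, here is roughly what that kind of conversion looks like. This is an illustrative sketch, not a diff from the actual commit, and the server, database, and share names are placeholders.

```powershell
# Before: one long line (all names are placeholders)
# Backup-DbaDatabase -SqlInstance sql01 -Database Sales -Path \\nas\backups -CompressBackup

# After: the same call with the parameters collected in a hashtable
$splatBackup = @{
    SqlInstance    = "sql01"
    Database       = "Sales"
    Path           = "\\nas\backups"
    CompressBackup = $true   # switch parameters become $true
}
Backup-DbaDatabase @splatBackup
```

With one or two parameters the original one-liner reads fine, which is why "only when appropriate" matters.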

Historical preservation: This is where it got interesting. It correctly identified posts that were historical announcements (like "dbatools 1.0 released!") and left the version numbers and statistics alone. Those weren't wrong—they were documenting what was true at that moment.

But for posts about ongoing practices (like how dbatools handles code signing), it went to the current dbatools repo, checked the actual current process, and updated the content to match reality.

The nuance problem

Even with pages of instructions, it still made judgment calls I wouldn't have made.

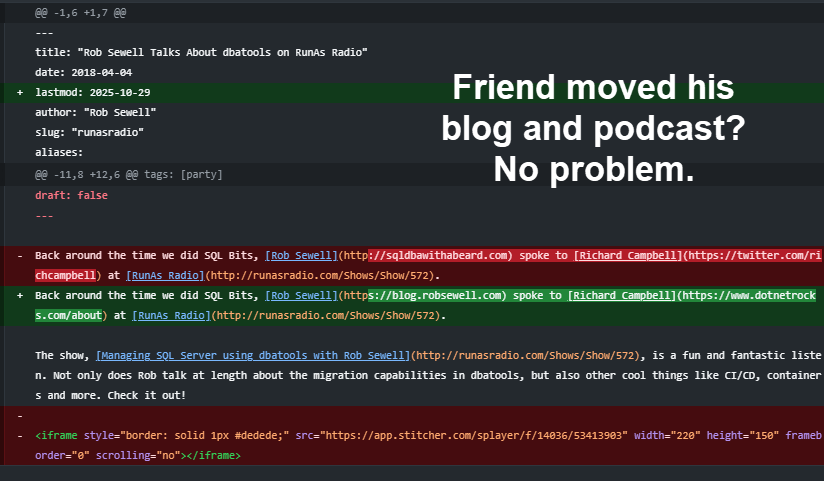

I noticed it had gone to GitHub and found drastically different websites for some of the people mentioned in old posts. Michael Lombardi's site changed completely. Warren Frame's too. It found the new sites and updated the links. Same for Rob and the podcast Rob was on, whose embed disappeared.

That's the kind of work I would never do manually—not because it's hard, but because it's tedious and requires checking every single person mentioned across hundreds of posts.

Another hard part: how do you encode "preserve the author's voice" in instructions? I tried. I wrote: "These posts are historical documents. Exercise judgment. When in doubt, preserve the original."

It got it all right enough for me. I skimmed every change in the git history and was just delighted.

Some changes were mechanical (fix broken link: good). Some required context (is this command still the best to use: depends). The instructions weren't exhaustive but somehow managed edge cases super well.

What this means

This isn't just about blog maintenance. It's about a whole type of work that never gets done because it's tedious but requires judgment.

Documentation that drifts out of date. Example code in READMEs that might not work with current versions. Internal wikis with broken links and outdated processes. All the maintenance work you know you should do but never have time for.

AI can handle this now—if you're willing to write the instructions and review the results.

The website rebuild was flashy. I rebuilt dbatools.io in a couple days and it looked impressive. But this blog maintenance work might matter more. How many projects have docs that are 80% correct but just old enough that you can't fully trust them? How many internal knowledge bases are slowly rotting because nobody has time to go through and fix everything?

Now we can actually fix that.

The process

I'm using Claude Code and a PowerShell module I wrote, called aitools, to process files in batches. The command looks like this:

Get-ChildItem *.md | Invoke-AITool -Prompt .\prompts\blog-maintenance.md

That prompt file contains all the instructions. Claude Code reads it, processes each markdown file, makes the changes, and I review the diffs.

Some files need no changes. Some need extensive updates. The AI figures out which is which based on the instructions.

I'm also releasing the PowerShell module I mentioned before—aitools—that wraps multiple AI CLIs into a standardized interface. It handles Claude Code, Cursor, Aider, Gemini CLI, and others through the same PowerShell commands. Makes this kind of batch processing straightforward.

The cost

This work doesn't require expensive models. I used Haiku 4.5 for most of it, but Sonnet handles it wonderfully too. The point is you don't need to burn through your monthly limits on the biggest models for maintenance work.

The instructions matter more than which model you pick.

Try it yourself

If you've got a blog, documentation, or any content that needs maintenance:

- Use Claude or ChatGPT to write detailed instructions covering the edge cases you care about

- Test on a few files first

- Refine your instructions based on what it got wrong

- Process the rest
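The steps above could be sketched like this. It assumes the aitools module and the prompt path from the command shown earlier, plus a git repository so every change is reviewable. The pilot size of five is arbitrary.

```powershell
# Assumes: aitools is installed, the prompt file exists, and this folder is a git repo
$posts = Get-ChildItem *.md | Sort-Object Name

# 1. Pilot run on a handful of files
$posts | Select-Object -First 5 | Invoke-AITool -Prompt .\prompts\blog-maintenance.md

# 2. Review what changed before going any further
git diff --stat
git diff

# 3. After refining the prompt file, commit the pilot and process the rest
git commit -am "Blog maintenance: pilot batch"
$posts | Select-Object -Skip 5 | Invoke-AITool -Prompt .\prompts\blog-maintenance.md
```

Committing the pilot separately keeps the later diffs small, so a bad instruction is easy to spot and revert.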

The instructions matter more than the model. I spent more time on that instruction document than on the actual processing. But once it's written, it works across hundreds of files.

And unlike manual maintenance, it actually gets done.

Don't have Claude Code? My next blog post will be about GitHub's Copilot CLI. Gemini has a free tier as well.