Got a ChatGPT Subscription? Use It to Keep Your Blog Up-to-date

TL;DR: ChatGPT Plus ($20/month) gives you access to GPT-5 via the Codex CLI, which is perfect for automating blog updates. I used it on this very blog and updated about 50 posts before I hit the rate limit. After that, I had to wait up to 4 hours before starting again. Skip the recommended Codex model (it's terrible) and use GPT-5 with minimal reasoning.

Get-ChildItem *.md | Invoke-AITool -Prompt .\blog-refresh.md

Over the past few days, I wrote about updating blogs with Claude Code and using GitHub Copilot CLI for blog maintenance. But what if you've already got a ChatGPT subscription? If you're paying $20/month for ChatGPT Plus anyway, you might as well use it for batch blog updates.

The ChatGPT paid options

ChatGPT Plus and Pro give you access to GPT-5 and other advanced models through the Codex CLI.

Plus lets you process up to 90 posts before hitting rate limits (the exact number varies with reasoning settings and token usage). For larger blogs, you can pay for the $200/month Pro plan, but honestly, if I had $200 to spend on AI, I'd put it toward Claude Max 20x.

Model selection matters (a lot)

When you first run Codex CLI, it'll probably recommend its own "Codex" model. Do not use it. It was terrible for blog maintenance. It would rename image LINKS in my markdown to whatever it wanted but wouldn't actually rename the image files on disk. Way too many errors. Commands that didn't match what was possible. Just a mess.
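Since that failure mode leaves markdown pointing at image files that don't exist, it's worth checking for it after any batch run, whichever model you use. Here's a minimal sketch; `Find-BrokenImageLink` is a hypothetical helper name, and it assumes images use standard `![alt](path)` syntax with paths relative to the post's folder. Remote URLs and root-relative paths (which usually resolve against a static folder) are skipped.

```powershell
# Hypothetical helper: report markdown image links whose target file is missing.
# Assumes ![alt](path) syntax with paths relative to the post's directory.
function Find-BrokenImageLink {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [System.IO.FileInfo] $Post
    )
    process {
        $text = Get-Content -LiteralPath $Post.FullName -Raw
        if (-not $text) { return }   # empty file: nothing to check

        # Capture the target inside ![...](target), allowing an optional "title"
        $pattern = '!\[[^\]]*\]\(\s*([^)\s]+)(?:\s+"[^"]*")?\s*\)'
        foreach ($m in [regex]::Matches($text, $pattern)) {
            $target = $m.Groups[1].Value

            # Skip URLs (http:, https:, data:) and root-relative paths
            if ($target -match '^(/|[a-z][a-z0-9+.-]+:)') { continue }

            $full = Join-Path $Post.DirectoryName $target
            if (-not (Test-Path -LiteralPath $full)) {
                [pscustomobject]@{ Post = $Post.Name; Image = $target }
            }
        }
    }
}

# Usage: Get-ChildItem content/post/*.md | Find-BrokenImageLink
```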

What worked surprisingly well: GPT-5 with minimal reasoning.

I tried GPT-5 with low reasoning first, and it took over 2 minutes per post. Then I tried minimal reasoning and got 20-40 seconds per post with about the same quality. Note that higher reasoning also uses up your token allowance faster, so you might only get through 25 or so posts before it errors out. One option is to add a Start-Sleep of 2-5 minutes between posts, then set it and forget it. Come back a few days later to a cleaned-up blog.
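The set-it-and-forget-it approach is just a loop with a pause. Here's a minimal sketch; `Invoke-ThrottledBatch` is a hypothetical helper, and the per-file work is passed in as a script block so you can drop in `Invoke-AITool` or anything else. The 180-second default is an assumption picked from the 2-5 minute range, not a tested value.

```powershell
# Hypothetical helper: run an action on each file, pausing between files so a
# long batch spreads its requests out instead of hitting the rate limit at once.
function Invoke-ThrottledBatch {
    param(
        [Parameter(Mandatory)] [System.IO.FileInfo[]] $File,
        [Parameter(Mandatory)] [scriptblock] $Action,
        [int] $DelaySeconds = 180
    )
    for ($i = 0; $i -lt $File.Count; $i++) {
        Write-Verbose "Processing $($File[$i].Name) ($($i + 1) of $($File.Count))"

        # Emit whatever the action returns for this file
        & $Action $File[$i]

        # Pause between files, but not after the last one
        if ($i -lt $File.Count - 1 -and $DelaySeconds -gt 0) {
            Start-Sleep -Seconds $DelaySeconds
        }
    }
}

# Usage (assumes aitools is installed and Codex is the default tool):
# Invoke-ThrottledBatch -File (Get-ChildItem content/post/*.md) -Action {
#     param($post)
#     $post | Invoke-AITool -Prompt .\prompts\blog-refresh.md
# }
```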

To set the model, run codex, type /model, select GPT-5, and set the reasoning effort to "minimal".

Here's what it looks like when you call codex directly from the command line:

# The manual way with all the flags
codex exec -C C:\github\blog\content\post `
  --dangerously-bypass-approvals-and-sandbox `
  --config model_reasoning_effort="minimal" `
  "Audit and update C:\blog\content\my-post.md. The blog covers various topics including dbatools, PowerShell, SQL Server, and technology..."

That works, but it's tedious. You have to remember the flags, the model config, the context directory. And if you want to process multiple files, you're writing a loop.
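For comparison, here's roughly what that hand-written loop looks like. It's a sketch: `New-CodexExecArgs` is a hypothetical helper, the prompt text is a placeholder, and `--model gpt-5` is my addition, assuming you'd rather pin the model on every call than rely on the interactive /model setting. Each `codex exec` call is a separate process, so every post starts with a fresh context.

```powershell
# Hypothetical helper: build the codex exec argument list for one post.
# Flags mirror the manual command; --model gpt-5 is an added assumption.
function New-CodexExecArgs {
    param(
        [Parameter(Mandatory)] [string] $PostPath,
        [Parameter(Mandatory)] [string] $WorkingDir,
        [string] $Instructions = 'Audit and update this blog post.'
    )
    # Each line emits one or two arguments; @() flattens them into one array
    @(
        'exec'
        '-C', $WorkingDir
        '--dangerously-bypass-approvals-and-sandbox'
        '--model', 'gpt-5'
        '--config', 'model_reasoning_effort="minimal"'
        "$Instructions File: $PostPath"
    )
}

# The loop itself: one codex exec process (and one fresh context) per post.
# $postDir = 'C:\github\blog\content\post'
# foreach ($post in Get-ChildItem -LiteralPath $postDir -Filter *.md) {
#     $codexArgs = New-CodexExecArgs -PostPath $post.FullName -WorkingDir $postDir
#     codex @codexArgs
# }
```

Splatting an array (`codex @codexArgs`) passes each element as a separate argument, which avoids the backtick line continuations.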

The easier way: Invoke-AITool

This is what I actually do. I use aitools because it handles all the scaffolding needed for batch processing.

# Install aitools
Install-Module aitools

# Set Codex as your default
Set-AIToolDefault -Tool Codex

# Process your posts
Get-ChildItem content/post/*.md |
  Invoke-AITool -Prompt .\prompts\blog-refresh.md

No flags to remember. No model configuration every time. Just pipe in your files and it processes them with fresh context each time.

The PowerShell pipeline support is what makes this practical. Each file gets processed independently with a fresh context. Previous files don't pollute the current file's processing, and the quality stays high across all 50 files.

Write your prompt once

Like I mentioned in my other posts, the instructions matter almost more than the model. I recommend adapting one of these:

Start with one of those and refine as you go. Tell the AI what to update, what to preserve, when to make judgment calls. Be specific about edge cases.

For example, I had to explicitly tell it: "Update 'coming soon' references if those features shipped, but leave version numbers alone—those are historical facts." Without that specificity, it would "fix" things that weren't wrong.
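If you don't have a prompt file yet, here's a minimal sketch of creating one from PowerShell. The wording is a hypothetical starting point assembled from the rules mentioned in this post (the "coming soon" rule, the link and screenshot fixes, the image-renaming problem), and the `prompts\blog-refresh.md` path simply matches the Invoke-AITool example.

```powershell
# Hypothetical starter prompt built from the rules described in this post.
# Adjust the wording for your own blog before running a batch.
$promptPath = Join-Path -Path 'prompts' -ChildPath 'blog-refresh.md'
New-Item -ItemType Directory -Path (Split-Path $promptPath) -Force | Out-Null

@'
Audit and update the blog post you are given.

Update:
- Dead or moved links (Microsoft docs, GitHub repos, Twitter links).
- "Coming soon" references, but only if those features have shipped.
- Screenshots of console output, by converting them to text.
- Code samples, only where modernizing them clearly helps the reader.

Preserve:
- Version numbers and dates that document what was true at the time.
- The author's voice and the post's structure.

If you rename an image link, rename the image file on disk to match.
'@ | Set-Content -LiteralPath $promptPath -Encoding utf8
```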

What to expect

With GPT-5 minimal reasoning and proper instructions, I got good results:

- Link rot fixed - Dead Microsoft docs, moved GitHub repos, Twitter links that go nowhere

- Screenshots converted - Old PowerShell console images extracted to text

- Code modernized - Where appropriate, not mechanically

- Historical context preserved - Version numbers and dates left alone when they documented what was true at that moment

Not as sophisticated as the Claude output I used on dbatools, but totally solid for what you're already spending. I had to update nearly 500 blog posts on my personal blog and didn't want to exhaust my weekly Claude Max 5x tokens in Claude Code.

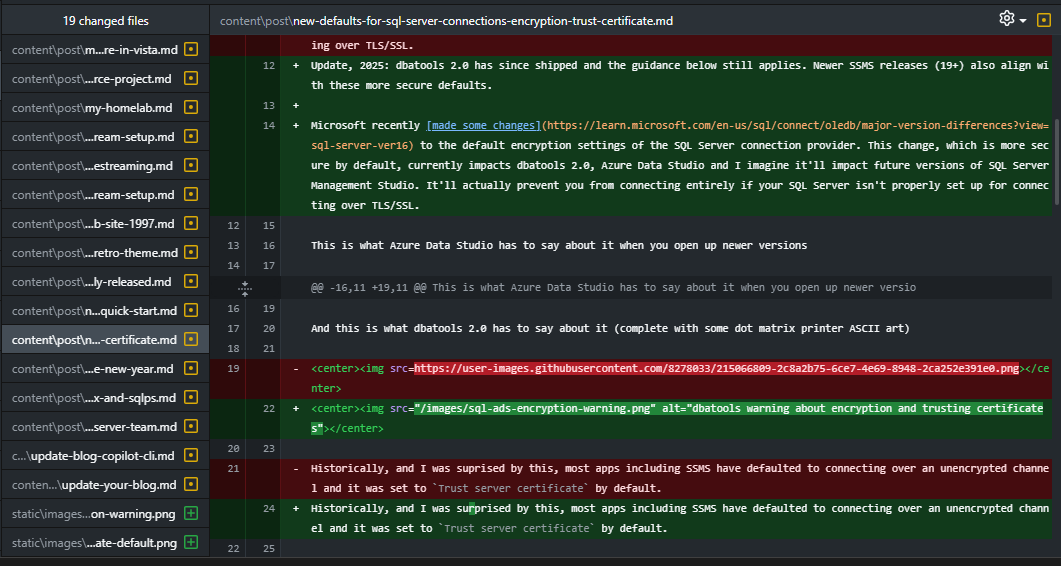

Here's a real example from one of my posts:

Codex downloaded an image that was hosted on githubusercontent, added a modernization note about encryption defaults, and even caught a typo while it was at it.

The rate limit reality

If you don't want to batch or wait longer, you've got options:

- Use Claude Code (my first choice, see this post)

- Try GitHub Copilot CLI if you've already got it at work (this post)

- Wait for the rate limits to reset and keep going
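If you go the wait-and-keep-going route, it helps to make the batch resumable so a restart after the reset doesn't redo the first 50 posts. Here's a minimal sketch, assuming a plain-text log of finished file paths; the `Invoke-ResumableBatch` name and the `processed.txt` file are hypothetical. A post is only logged when the action finishes without throwing, which assumes a rate-limit failure surfaces as a terminating error. If your tool only writes a warning, you'd need a different success check.

```powershell
# Hypothetical helper: skip files already listed in a log, and append each
# file to the log only after its action completes without throwing.
function Invoke-ResumableBatch {
    param(
        [Parameter(Mandatory)] [System.IO.FileInfo[]] $File,
        [Parameter(Mandatory)] [scriptblock] $Action,
        [Parameter(Mandatory)] [string] $LogPath
    )
    # Load previously finished paths into a lookup table
    $done = @{}
    if (Test-Path -LiteralPath $LogPath) {
        foreach ($line in Get-Content -LiteralPath $LogPath) { $done[$line] = $true }
    }

    foreach ($f in $File) {
        if ($done.ContainsKey($f.FullName)) { continue }
        try {
            & $Action $f
            Add-Content -LiteralPath $LogPath -Value $f.FullName
        }
        catch {
            # Stop at the first failure (e.g. a rate-limit error) so the
            # remaining files are picked up on the next run.
            Write-Warning "Stopped at $($f.Name): $($_.Exception.Message)"
            return
        }
    }
}

# Usage: rerun the same command after the rate limit resets.
# Invoke-ResumableBatch -File (Get-ChildItem content/post/*.md) -LogPath .\processed.txt -Action {
#     param($post)
#     $post | Invoke-AITool -Prompt .\prompts\blog-refresh.md
# }
```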

Skip the hassle

Don't want to deal with Codex CLI model configuration? Just use Invoke-AITool. It works with Claude Code, Codex CLI, GitHub Copilot, Gemini, Aider—whatever you've got installed. Consistent syntax, proper pipeline support, automatic context management.

That's what I use, and it's why I can pipe 50 markdown files through an AI and actually get quality results without babysitting the process.

Already paying for ChatGPT Plus or Pro? You might as well use it for blog maintenance. Just remember: GPT-5 with minimal reasoning, not the Codex model. And if you want to make it easy, use aitools.

The blog maintenance you've been putting off for years? Now you can actually do it.