Update Your Blog with GitHub Copilot CLI

TL;DR: Use GitHub Copilot CLI to batch-update old blog posts—fix broken links, modernize code examples, convert screenshots to text.

Get-ChildItem *.md | Invoke-AITool -Prompt .\blog-refresh.md

Write a prompt doc with your rules, run the command, review the diffs, commit what works.

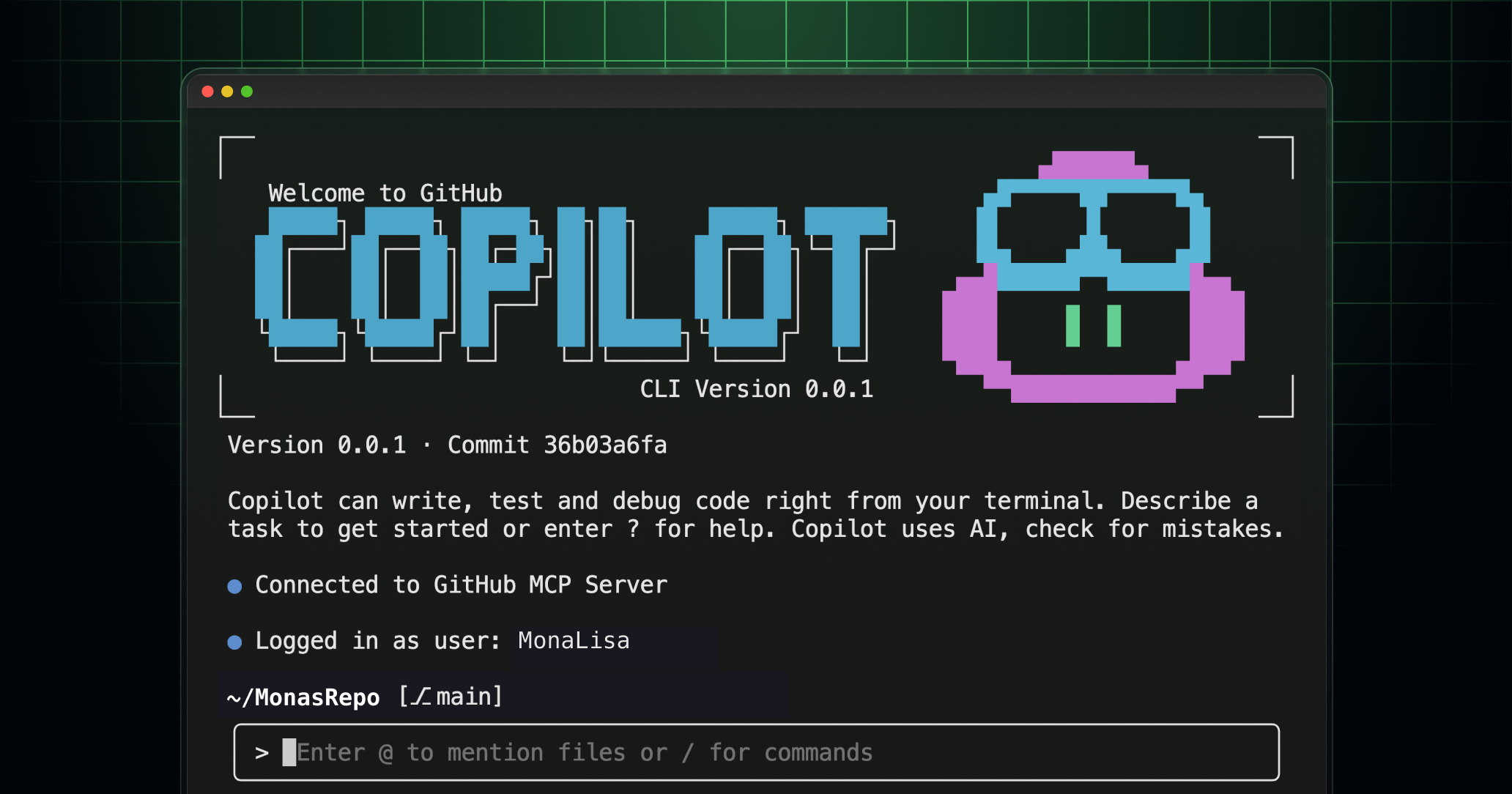

Yesterday I wrote about updating blog content with Claude Code. But what if your company uses GitHub Copilot instead? If you've got GitHub, Copilot's probably already available to you. Here's how to do the same blog maintenance work with GitHub Copilot CLI.

The problem

Blog posts from 2016 need different fixes than posts from 2023. Links rot at different rates—Microsoft docs got reorganized, Twitter links died completely, some GitHub repos moved. Screenshots age badly, especially the old Windows PowerShell blue console captures. Commands change—parameters get deprecated, best practices evolve.

You can't fix this with find-and-replace. Some links should stay historical. Some outdated information is actually correct in context. Some version numbers document what was true then. Manual review of every post was never going to happen, but AI can handle long, detailed prompts and make judgment calls about what needs updating versus what needs preserving.
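Before you hand anything to an AI, it can help to see how much link rot you're dealing with. Below is a minimal PowerShell sketch that lists every outbound link in your posts along with its host. It assumes the posts are markdown files and the links are ordinary http(s) URLs, either bare or inside [text](url) links. Get-PostLink is a hypothetical helper. It is not part of aitools or Copilot, and the regex is deliberately simple.

```powershell
# Hypothetical helper: list outbound links in markdown posts so you can see which hosts dominate.
# Assumption: links are plain http(s) URLs, either bare or inside [text](url) markdown links.
function Get-PostLink {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [System.IO.FileInfo]$File
    )
    process {
        $text = Get-Content -LiteralPath $File.FullName -Raw
        if (-not $text) { return }   # skip empty files

        # Stop each URL at whitespace, quotes, angle brackets, or a closing ) or ]
        foreach ($m in [regex]::Matches($text, 'https?://[^\s)"''<>\]]+')) {
            # Drop trailing sentence punctuation that the regex swallows
            $url = $m.Value.TrimEnd([char[]]'.,;:')
            $uri = $null
            if ([Uri]::TryCreate($url, [UriKind]::Absolute, [ref]$uri)) {
                [pscustomobject]@{
                    Post = $File.Name
                    Host = $uri.Host
                    Url  = $url
                }
            }
        }
    }
}

# Usage: count links per host across all posts
# Get-ChildItem content/post/*.md | Get-PostLink |
#     Group-Object Host | Sort-Object Count -Descending | Select-Object Count, Name
```

Grouping by host usually makes the problem areas obvious, such as old docs domains or twitter.com. The judgment calls about what to keep are still up to you, or to the prompt.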

Free tier

GitHub has a permanent free tier with 50 premium requests, 2,000 code completions, and 50 chat messages per month. With a standard (1x) model, each file you process counts as one premium request. That's 50 blog posts per month, every month, forever.

Need more than 50? You can sign up for the 30-day free trial of Copilot Pro. That's 300 premium requests—enough for large blogs. After the trial, Pro is $10/month if you need it. That's half the cost of Claude Pro ($20/month).

When I'm presenting about AI, most people say they've got GitHub Copilot at work. That's great news, because it means they can use CLIs like this one or AI-integrated VS Code extensions.

Getting started

You need two things:

- GitHub Copilot CLI

- Your blog repo with markdown files

The easiest way to get set up is with aitools, which handles installation, authentication, updates, and gives you a consistent interface across all AI CLIs:

# Install aitools
Install-Module aitools

# Install GitHub Copilot CLI
Install-AITool -Tool GitHubCopilot

Already have it installed? Keep it updated:

Update-AITool -Tool GitHubCopilot

Don't want to use aitools? Install manually:

# Install via npm
npm install -g @github/copilot

# Then authenticate
copilot

The main advantage of aitools is consistent syntax across all AI CLIs (no more memorizing different flags), proper PowerShell pipeline support that processes files with fresh context each time, and automatic update management. Plus you can run the same prompt through multiple AI tools to compare results.

Choosing your model

Once you're set up, you need to decide which model to use. This matters because different models have different costs and capabilities—and GitHub Copilot CLI has specific limitations you should know about.

GitHub Copilot CLI currently supports these models: claude-sonnet-4.5, claude-sonnet-4, claude-haiku-4.5, and gpt-5. Every CLI request counts as a premium request, so the CLI has no zero-cost model option, even on the free tier. (GPT-4o is available in Copilot Chat, but not the CLI. Hopefully that changes.)

If you try copilot --model gpt-4o (a free model), you'll get an error about invalid model choices.

For blog maintenance, claude-sonnet-4.5 is what I'd pick. GPT-5 made mistakes that Sonnet caught. Haiku 4.5 is honestly good enough for this work, and it also stretches the free tier. Its premium request multiplier is 0.33x, so 50 requests cover about 150 files instead of 50.

But if you're on the 30-day Pro trial, go Sonnet all the way. You've got 300 premium requests to burn through, and for a project where you're reviewing every change anyway, might as well use the best.
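The multiplier math is easy to get wrong when you're planning a batch, so here's a small sketch. It assumes one CLI prompt per file and the multipliers shown in the code: 1x for Sonnet 4.5 and 0.33x for Haiku 4.5. GitHub changes these values, so check the current premium request multiplier table before you rely on them. Get-FileBudget is a hypothetical helper.

```powershell
# Hypothetical helper: how many files a premium-request allowance covers for a given model.
# Assumptions: one CLI prompt per file; the multipliers below may be out of date.
function Get-FileBudget {
    param(
        [Parameter(Mandatory)][int]$PremiumRequests,
        [Parameter(Mandatory)][string]$Model
    )
    $multipliers = @{
        'claude-sonnet-4.5' = 1.0
        'claude-haiku-4.5'  = 0.33
    }
    if (-not $multipliers.ContainsKey($Model)) {
        throw "No multiplier recorded for '$Model'."
    }
    # Each file costs (1 request x multiplier); round down to whole files
    [int][math]::Floor($PremiumRequests / $multipliers[$Model])
}

# Free tier with Haiku, free tier with Sonnet, and the 30-day Pro trial with Sonnet
Get-FileBudget -PremiumRequests 50  -Model 'claude-haiku-4.5'   # 151
Get-FileBudget -PremiumRequests 50  -Model 'claude-sonnet-4.5'  # 50
Get-FileBudget -PremiumRequests 300 -Model 'claude-sonnet-4.5'  # 300
```

At 0.33x, 50 requests come out to roughly 150 files. If you later add a model to the table, the same function works unchanged.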

Now you need a prompt document with your maintenance rules.

Write your prompt

The instructions matter more than the model. I learned this processing all the dbatools posts—specificity wins.

I recommend just taking one of my prompts and adapting it as needed:

Start with one of those and refine as you go. You can even give Claude your existing prompt, say "now make this for my blog," and it'll adapt it. That's how I made my personal blog prompt. It was based on the dbatools prompt.

The nuance matters. I had to tell it: "Update 'coming soon' references if those features shipped, but leave version numbers alone—those are historical facts." Without that, it'd "fix" things that weren't wrong.

I even told it explicitly that this is a nuanced task.
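If you'd rather start from scratch than adapt an existing prompt, here's a minimal sketch that writes a starter prompt document from PowerShell. The rules are illustrative and are drawn from the kinds of fixes described in this post. New-BlogRefreshPrompt is a hypothetical helper, and prompts\blog-refresh.md is simply the path used in this post's examples.

```powershell
# Hypothetical helper: write a starter prompt document with maintenance rules.
# The rules are examples drawn from this post; edit them for your own blog.
function New-BlogRefreshPrompt {
    param([Parameter(Mandatory)][string]$Path)

    # Create the parent folder (e.g. .\prompts) if it doesn't exist yet
    $dir = Split-Path -Path $Path -Parent
    if ($dir) { New-Item -ItemType Directory -Path $dir -Force | Out-Null }

    # Single-quoted here-string: nothing inside is expanded as a variable
    $rules = @'
# Blog refresh rules

This is a nuanced task. Fix what is broken or misleading, and preserve what is historical.

Update:
- Broken or moved links: use the current URL, or remove the link if nothing replaces it.
- "Coming soon" references: update them if the feature has since shipped.
- Screenshots of console output: transcribe the commands and output as text.
- Dead tweet embeds: replace with a brief paraphrase of what was said.

Preserve:
- Version numbers in historical or announcement posts. They document what was true then.
- Statements that were accurate in their original context.

Change only what these rules call for.
'@
    Set-Content -LiteralPath $Path -Value $rules -Encoding utf8
}

# Usage
# New-BlogRefreshPrompt -Path .\prompts\blog-refresh.md
```

Treat the generated file as a first draft. The specific "change this, but not that" rules you add after your first test batch are what make it useful.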

The actual implementation

Once you've got your prompt figured out, you need to run it against your files. GitHub Copilot CLI can do this, but the @file syntax gets tedious when you're batch processing dozens of posts.

I use aitools because it handles the context management automatically. It starts fresh with every piped file, so quality stays super high. Previous files don't pollute the current file's context.

Plus, I got tired of looking up different flags for gh copilot, claude, gemini, etc. With aitools, I have consistent commands across all AI CLIs—GitHub Copilot, Claude Code, Gemini, Aider, whatever—plus proper error handling, predictable output, and PowerShell pipeline support.

# Set GitHub Copilot as default
Set-AIToolDefault -Tool GitHubCopilot

# Process your posts
Get-ChildItem content/post/*.md |
    Invoke-AITool -Prompt .\prompts\blog-refresh.md

Don't have aitools? You can call Copilot CLI directly, but you need to follow specific patterns for how it loads file context:

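$prompt = Get-Content .\prompts\blog-refresh.md -Raw

Get-ChildItem content/post/*.md | ForEach-Object {
    Write-Host "Processing $($_.Name)..." -ForegroundColor Cyan

    # Copilot needs the file reference FIRST with @ syntax
    $message = "@$($_.FullName)`n`n$prompt"

    # Non-interactive mode: -p passes the prompt; --allow-tool 'write' lets Copilot
    # edit the file without stopping for approval, so review with git diff afterwards
    copilot -p $message --allow-tool 'write'

    # Native commands report failure through $LASTEXITCODE, not exceptions
    if ($LASTEXITCODE -ne 0) {
        Write-Warning "Copilot exited with code $LASTEXITCODE on $($_.Name)"
    }
}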

The @ syntax tells Copilot to load that file into context before reading your instructions. File reference first, then your prompt. That order matters.

Either way, review every change before you commit it. Running git diff shows exactly what was edited in each post.

What it found

I tested this on a batch of posts. Here's what it fixed:

Link rot everywhere. Microsoft has reorganized its docs multiple times since 2016. Twitter links now go nowhere. GitHub paths changed. It tested every link, then updated or removed the broken ones.

Those embarrassing blue screenshots. You know the ones—classic Windows PowerShell console captures from 2016. It extracted the commands and output as text. Not as sophisticated as Claude's extraction, but it worked.

Dead social embeds. Old tweet embeds got converted to brief paraphrased statements. Where it could find people's Bluesky profiles, it linked those instead.

Code that aged out. Parameter names that changed. Commands that needed splatting. It updated these, but only when appropriate—it didn't mechanically "modernize" everything.

Smart preservation. This is where it got interesting. It left version numbers alone in historical announcement posts. Those weren't wrong—they documented what was true at that moment. But for posts about ongoing practices, it checked the current repo and updated to match reality.
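If you haven't run into the term, "needed splatting" refers to rewrites like the one below. A long run of inline parameters gets collected into a hashtable and passed with @ instead of $. This is an illustrative before/after only. Get-ChildItem stands in for whatever long command an old post used, and the content\post path is hypothetical.

```powershell
# Before: every parameter inline, the style many older posts used
Get-ChildItem -Path .\content\post -Filter *.md -File -Recurse -ErrorAction SilentlyContinue

# After: the same parameters in a hashtable, splatted with @
# (switch parameters such as -File and -Recurse become $true)
$getPostParams = @{
    Path        = '.\content\post'   # hypothetical post folder
    Filter      = '*.md'
    File        = $true
    Recurse     = $true
    ErrorAction = 'SilentlyContinue'
}
Get-ChildItem @getPostParams
```

Both forms call the cmdlet with identical parameters. The splatted version is just easier to read and edit, which is why it's worth updating in posts people copy from, and not something to apply mechanically everywhere.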

Beyond basic fixes

The real value shows up in the tedious work that requires judgment. When I used Claude Code on dbatools.io, it found drastically different websites for people mentioned in old posts and updated them. Copilot needs more explicit instructions—when I told it "check if author websites changed," it did—but both can handle the kind of meticulous work you'd never do manually.

This applies to any documentation that drifts: example code in READMEs that might not work with current versions, internal wikis with broken links and outdated processes, projects with docs that are 80% correct but old enough you can't fully trust them. The maintenance work you know you should do but never have time for.

Now you can actually fix it, even within the free tier if your blog isn't massive.

Try it yourself

Pick 50 old posts. Write instructions for what links to fix, what code to update, and what historical content to keep.

Run Copilot on those files. See what happens. Refine your instructions based on what it got wrong. Then scale up.
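To pick those first 50, the date in each post's front matter is more reliable than file timestamps, because a fresh git clone resets those. Here's a minimal sketch, assuming Hugo-style front matter with either a date: 2016-05-01 (YAML) line or a date = "2016-05-01T..." (TOML) line. Get-OldestPost is a hypothetical helper, and it skips posts without a parseable date.

```powershell
# Hypothetical helper: return the N oldest posts by front-matter date.
# Assumption: a line starting with "date:" or "date =" followed by a yyyy-MM-dd value.
function Get-OldestPost {
    param(
        [Parameter(Mandatory)][string]$Path,
        [int]$First = 50
    )
    Get-ChildItem -Path $Path -Filter *.md -File | ForEach-Object {
        $text = Get-Content -LiteralPath $_.FullName -Raw
        # (?m) makes ^ match at each line start; the quote before the date is optional
        if ($text -match '(?m)^date\s*[:=]\s*["'']?(\d{4}-\d{2}-\d{2})') {
            [pscustomobject]@{
                File = $_
                Date = [datetime]::ParseExact($Matches[1], 'yyyy-MM-dd',
                    [Globalization.CultureInfo]::InvariantCulture)
            }
        }
    } | Sort-Object Date | Select-Object -First $First | ForEach-Object { $_.File }
}

# Usage: process the 50 oldest posts first
# Get-OldestPost -Path content/post | Invoke-AITool -Prompt .\prompts\blog-refresh.md
```

Because the helper emits the same file objects Get-ChildItem does, it can drop into the existing pipeline in place of Get-ChildItem.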

I maintained blogs manually for years and always fell behind. Now I pipe markdown through an AI CLI and review diffs. That's sustainable in a way manual maintenance never was.

Want to see how this worked with Claude Code? Check out my posts on rebuilding dbatools.io and the full blog maintenance workflow.