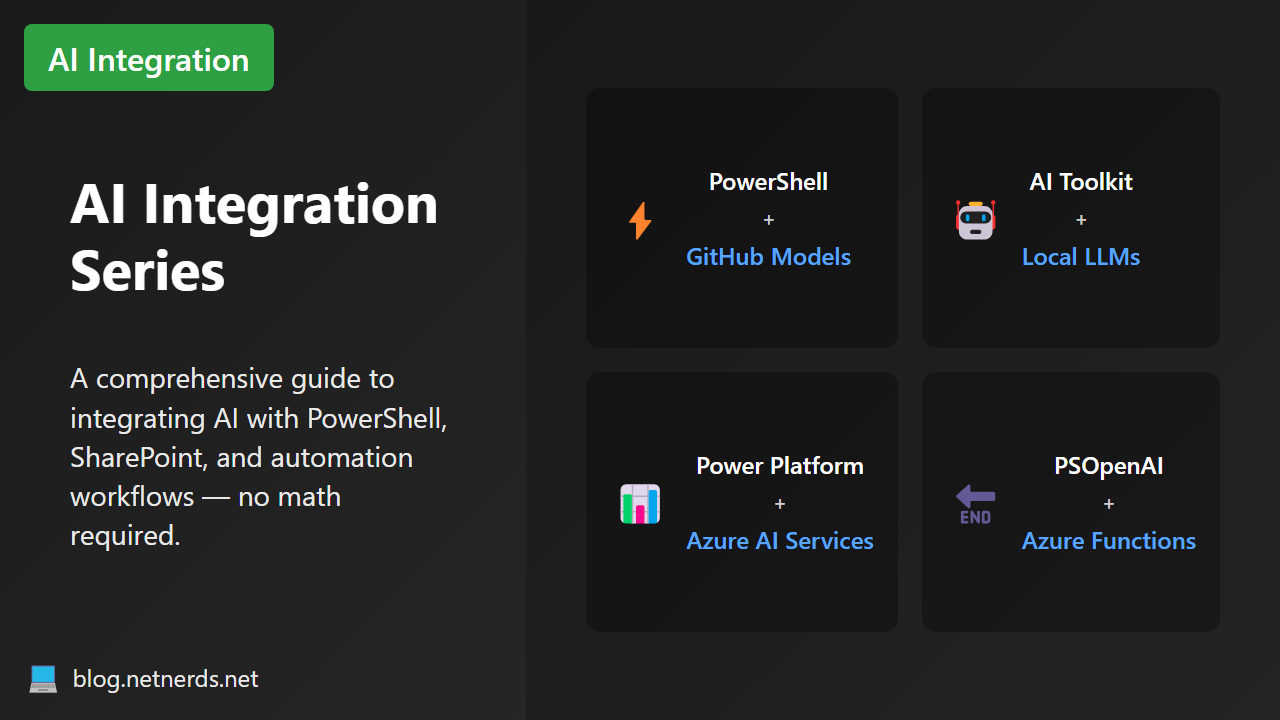

AI Integration Series

Lately, I've been knee-deep in AI automation, local and remote models, and their integration with PowerShell, SharePoint, and automation workflows. If you're curious about AI in practical IT automation, here's a quick rundown of my latest blog posts.

AI Automation with AI Toolkit for VS Code

Use GitHub Models and VS Code's AI Toolkit to convert unstructured CLI output into structured JSON with ease.

This guide covers:

- Installing the AI Toolkit extension

- Using Prompt Builder with models like `gpt-4o-mini`

- Creating a JSON schema to extract clean data from `netstat` output

- Seeing structured results side-by-side with the raw prompt

- Making AI-powered automation visual and beginner-friendly
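
To make the schema step concrete, here is a minimal sketch of what a JSON schema for `netstat` rows could look like when built in PowerShell. The property names (`protocol`, `localAddress`, and so on) are my own illustrative choices, not the ones used in the guide.

```powershell
# Hypothetical schema: property names are illustrative, not taken from the guide.
$connectionSchema = [ordered]@{
    type       = 'object'
    properties = [ordered]@{
        connections = [ordered]@{
            type  = 'array'
            items = [ordered]@{
                type       = 'object'
                properties = [ordered]@{
                    protocol       = @{ type = 'string' }
                    localAddress   = @{ type = 'string' }
                    localPort      = @{ type = 'integer' }
                    foreignAddress = @{ type = 'string' }
                    state          = @{ type = 'string' }
                }
                required             = @('protocol', 'localAddress', 'localPort', 'foreignAddress', 'state')
                additionalProperties = $false
            }
        }
    }
    required             = @('connections')
    additionalProperties = $false
}

# -Depth must exceed the nesting level, or ConvertTo-Json truncates the inner objects.
$schemaJson = $connectionSchema | ConvertTo-Json -Depth 10
$schemaJson
```

The resulting JSON text is the kind of thing you would paste into a schema field; wrapping the rows in a top-level `connections` array keeps the root an object, which structured-output features generally expect.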

Getting Started with AI for PowerShell

Build AI-enabled PowerShell modules and scripts — no math required.

This guide covers:

- Setting up GitHub Models, a free AI API with generous limits

- Installing the PSOpenAI PowerShell module

- Making your first API calls to `gpt-4o-mini`

- Using the `SystemMessage` parameter for context-aware responses

- Getting structured data back as usable PowerShell objects, no regex needed
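
For a feel of what a first call looks like, here is a rough sketch using PSOpenAI's `Request-ChatCompletion`. It assumes the module is already installed and that a GitHub token lives in `$env:GITHUB_TOKEN` (a variable name I picked for illustration). The endpoint URL is also an assumption, so check the GitHub Models documentation for the current one.

```powershell
# Assumptions: PSOpenAI is installed, and $env:GITHUB_TOKEN (an illustrative
# variable name) holds a GitHub token with access to GitHub Models.
$chatParams = @{
    Model         = 'gpt-4o-mini'
    SystemMessage = 'You are a concise assistant for PowerShell administrators.'
    Message       = 'In one sentence, what does Get-ChildItem -Recurse do?'
    ApiKey        = $env:GITHUB_TOKEN
    # Assumed GitHub Models endpoint; confirm the current URL before relying on it.
    ApiBase       = 'https://models.inference.ai.azure.com'
}

# Only call the API when the module and token are actually available.
if ((Get-Command Request-ChatCompletion -ErrorAction SilentlyContinue) -and $env:GITHUB_TOKEN) {
    $response = Request-ChatCompletion @chatParams
    $response.Answer   # the model's reply as plain text
}
```

Splatting the parameters keeps the system message and the user message visibly separate, which makes it easy to swap the context without touching the question.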

Asking Tiny Questions: Local LLMs for PowerShell Devs

Small models, powerful answers. Use structured output with local LLMs to answer focused questions and clean data.

This guide covers:

- Running Ollama with models like Llama 3.1 and TinyLlama

- Asking true/false and single-object questions using PowerShell + REST

- Defining schemas for structured JSON output

- Extracting artist/song metadata from messy MP3 filenames

- Why tiny, atomic prompts produce better results on local models
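
As a sketch of the "PowerShell + REST" idea, the two functions below build a schema-constrained request for Ollama's `/api/chat` endpoint and parse the reply. Both function names are hypothetical, the default port 11434 and the `llama3.1` model tag are assumptions about your local setup, and passing a schema in `format` requires an Ollama version that supports structured outputs.

```powershell
# Hypothetical helper: builds a request body for Ollama's /api/chat endpoint.
function New-OllamaChatBody {
    param(
        [Parameter(Mandatory)] [string] $FileName,
        [string] $Model = 'llama3.1'
    )

    # Schema for one small, atomic answer: artist and song only.
    $schema = @{
        type       = 'object'
        properties = @{
            artist = @{ type = 'string' }
            song   = @{ type = 'string' }
        }
        required   = @('artist', 'song')
    }

    $body = @{
        model    = $Model
        stream   = $false    # return one complete response instead of chunks
        format   = $schema   # ask Ollama to constrain the output to this schema
        messages = @(
            @{ role = 'user'; content = "Extract the artist and song title from this filename: $FileName" }
        )
    }

    $body | ConvertTo-Json -Depth 10
}

# Hypothetical helper: sends the request to a local Ollama server (default port assumed).
function Get-TrackInfo {
    param([Parameter(Mandatory)] [string] $FileName)

    $json  = New-OllamaChatBody -FileName $FileName
    $reply = Invoke-RestMethod -Uri 'http://localhost:11434/api/chat' -Method Post -Body $json -ContentType 'application/json'

    # The model's answer arrives as a JSON string inside message.content.
    $reply.message.content | ConvertFrom-Json
}
```

With a server running and the model pulled, `Get-TrackInfo -FileName 'some messy name.mp3'` should hand back an object with `artist` and `song` properties. Keeping the question this narrow is the point: one filename in, one tiny object out.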

Local Models, Mildly Demystified

Curious how local models work? This walkthrough makes it approachable for automation engineers.

This guide covers:

- How LLMs are just binary files

- The difference between weights, architecture, and metadata

- How AI Toolkit runs small, safe models on local machines

- Comparing model loading to .NET assemblies and PowerShell modules

- Why tiny models still do surprisingly smart things

Asking Bigger Questions: Remote LLMs for DevOps

When local models can't handle the load, remote models can parse full command output like netstat into structured data.

This guide covers:

- Using cloud models like `gpt-4o-mini` to handle bigger prompts

- Building a `ConvertTo-Object` PowerShell function that calls AI

- Auto-generating JSON schemas with `Generate-OutputSchema`

- Parsing legacy command output (like `netstat`, `ipconfig`, etc.)

- Avoiding regex hell and getting PowerShell-native objects from plain text

PDF Text to SQL with Structured Output

Extract data from scanned PDF records and insert it straight into SQL Server — no manual parsing.

This guide covers:

- Creating structured output schemas for immunization records

- Extracting PDF content as markdown using OpenAI + PSOpenAI

- Parsing the markdown into structured JSON

- Inserting the resulting objects directly into SQL Server

- Handling real-world issues like hallucination, retry logic, and malformed text
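
One way to approach the retry part is a small wrapper that re-runs the model call until the reply parses as JSON and contains the fields you expect. This is a generic sketch under my own assumptions, not the logic from the guide; `Invoke-WithJsonRetry` and its parameters are hypothetical names.

```powershell
# Hypothetical helper: runs a script block until its output parses as JSON
# and contains every required property, or the attempts run out.
function Invoke-WithJsonRetry {
    param(
        [Parameter(Mandatory)] [scriptblock] $Action,
        [string[]] $RequiredProperty = @(),
        [int] $MaxAttempts = 3
    )

    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            $raw    = & $Action
            $parsed = $raw | ConvertFrom-Json -ErrorAction Stop

            # Reject answers that are valid JSON but miss expected fields.
            $missing = @($RequiredProperty | Where-Object { $_ -notin $parsed.PSObject.Properties.Name })
            if ($missing.Count -eq 0) { return $parsed }

            Write-Warning "Attempt $attempt is missing: $($missing -join ', ')"
        }
        catch {
            Write-Warning "Attempt $attempt returned malformed JSON: $($_.Exception.Message)"
        }
    }

    throw "No valid response after $MaxAttempts attempts."
}
```

In practice the script block would wrap whatever call returns the model's text, and `-RequiredProperty` would list the columns the SQL insert depends on. Note that this only checks shape; it cannot tell whether a well-formed value was hallucinated, so spot checks against the source PDF are still worth doing.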

Automating SharePoint with Power Platform + AI

Get perfect document metadata in SharePoint — without asking users to fill out a single field.

This guide covers:

- Using Power Automate to trigger on new SharePoint uploads

- Calling an Azure Function to extract and analyze document content

- Using `gpt-4o-mini` to auto-tag documents with type, parties, keywords, etc.

- Updating SharePoint metadata without user input

- Comparing accuracy and pricing vs Microsoft Syntex

Document Intelligence with Azure Functions

A technical breakdown of the Azure Function that powers your SharePoint classifier and can be reused for anything.

This guide covers:

- Setting up an Azure Function that accepts documents via HTTP

- Extracting text from PDFs and Word files

- Sending that text to `gpt-4o-mini` with a structured schema

- Returning clean, consistent JSON for downstream automation

- Reusing this setup across SharePoint, SQL Server, Teams, or anything else

I'll keep posting more about practical AI integrations in PowerShell, DevOps, and beyond. If any of these topics interest you, check them out and let me know what you think.